Major web companies are adopting HTTP/3, the latest iteration of the HTTP protocol, in both experimental and production systems. Last week, Cloudflare announced that its edge network now supports HTTP/3. Earlier this month, Google’s Chrome Canary added support for HTTP/3, and Mozilla Firefox will ship support in a Nightly release this fall. The curl command-line client also supports HTTP/3.

In an announcement, Cloudflare shared that customers can turn on HTTP/3 support for their domains by enabling an option in their dashboards. “We’ve been steadily inviting customers on our HTTP/3 waiting list to turn on the feature (so keep an eye out for an email from us), and in the coming weeks we’ll make the feature available to everyone,” the company added.

Last year, Cloudflare announced preliminary support for QUIC and HTTP/3. Customers could also join a waiting list to try QUIC and HTTP/3 as soon as they became available. Customers on the waiting list who have received an email from Cloudflare can enable support by flipping the switch in the “Network” tab of the Cloudflare dashboard. Cloudflare further added, “We expect to make the HTTP/3 feature available to all customers in the near future.”

Cloudflare’s HTTP/3 and QUIC support is backed by quiche. It is an implementation of the QUIC transport protocol and HTTP/3 written in Rust. It provides a low-level API for processing QUIC packets and handling connection state.

Why HTTP/3 was introduced

HTTP/1.0 required a new TCP connection for each request/response exchange between the client and the server, which resulted in latency and scalability issues. To resolve these issues, HTTP/1.1 was introduced. It included critical performance improvements such as keep-alive connections, chunked transfer encoding, byte-range requests, additional caching mechanisms, and more.

Keep-alive, or persistent, connections allowed clients to reuse TCP connections. A keep-alive connection eliminated the need to repeat the initial connection establishment step for every request. It also reduced the impact of TCP slow start across multiple requests. However, there were still some limitations. Multiple requests could share a single TCP connection, but they still had to be serialized one after the other. This meant that the client and server could execute only a single request/response exchange at a time on each connection.
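The saving from connection reuse can be sketched with a rough round-trip count. This is a simplified model, not a benchmark: it assumes one round trip for the TCP handshake and one per request/response exchange, and it ignores TLS, slow start, and transfer time. The function names are made up for illustration.

```python
def round_trips_http10(num_requests: int) -> int:
    """HTTP/1.0 model: every request opens a fresh TCP connection.

    Each request costs one round trip for the TCP handshake
    plus one for the request/response exchange itself.
    """
    return num_requests * (1 + 1)


def round_trips_keep_alive(num_requests: int) -> int:
    """HTTP/1.1 keep-alive model: one handshake, then serialized exchanges."""
    if num_requests == 0:
        return 0
    # One handshake for the connection, then one round trip per request,
    # because requests on the connection are handled one after the other.
    return 1 + num_requests


if __name__ == "__main__":
    for n in (1, 5, 20):
        print(n, round_trips_http10(n), round_trips_keep_alive(n))
```

Under these assumptions, five requests cost ten round trips without keep-alive and six with it. The exchanges themselves are still strictly sequential, which is the limitation described above.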

HTTP/2 tried to solve this problem by introducing the concept of HTTP streams. This allowed multiple requests and responses to be transmitted over the same connection at the same time. However, all streams still share a single TCP connection, and TCP delivers bytes strictly in order. The drawback is that when packets are lost, all requests and responses are equally affected, even if the lost data concerns only a single request.
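The idea of streams sharing one connection can be illustrated with a toy multiplexer. The sketch below is not the HTTP/2 wire format (real frames are binary and carry headers, flow-control data, and more); it only shows chunks from several responses being tagged with a stream ID, interleaved on one connection, and reassembled on the other side. The function names and sample payloads are hypothetical.

```python
from collections import defaultdict
from itertools import zip_longest
from typing import Dict, List, Tuple

Frame = Tuple[int, bytes]  # (stream ID, chunk of that stream's data)


def interleave(responses: Dict[int, List[bytes]]) -> List[Frame]:
    """Round-robin the chunks of each response into one sequence of frames."""
    tagged = [[(sid, chunk) for chunk in chunks] for sid, chunks in responses.items()]
    frames: List[Frame] = []
    for group in zip_longest(*tagged):
        # Shorter responses run out of chunks first; skip their empty slots.
        frames.extend(frame for frame in group if frame is not None)
    return frames


def demultiplex(frames: List[Frame]) -> Dict[int, bytes]:
    """Reassemble each stream's payload from the interleaved frames."""
    streams: Dict[int, List[bytes]] = defaultdict(list)
    for stream_id, chunk in frames:
        streams[stream_id].append(chunk)
    return {sid: b"".join(chunks) for sid, chunks in streams.items()}


if __name__ == "__main__":
    responses = {1: [b"<html>", b"</html>"], 3: [b"body{", b"}"], 5: [b"PNG"]}
    frames = interleave(responses)
    print(frames)
    print(demultiplex(frames))
```

Because every frame carries its stream ID, the receiver can rebuild each response even though the frames arrive mixed together.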

HTTP/3 aims to address the problems in the previous versions of HTTP. Instead of TCP, it uses a new transport protocol called QUIC (originally short for Quick UDP Internet Connections), which runs over UDP. The QUIC transport protocol comes with features like stream multiplexing and per-stream flow control.

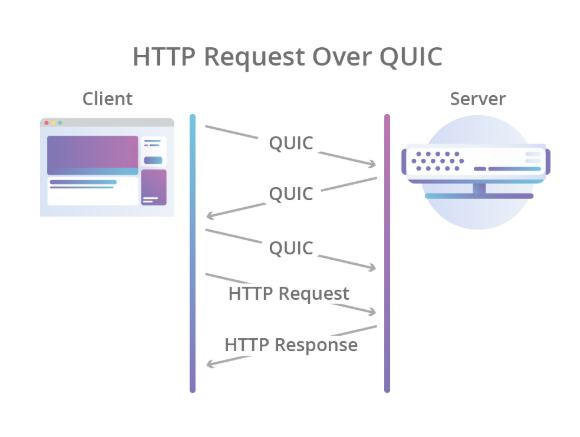

Here’s a diagram depicting the communication between client and server using QUIC and HTTP/3:

Source: Cloudflare

Through QUIC, HTTP/3 provides reliability at the stream level and congestion control across the entire connection. QUIC streams share the same QUIC connection, so no additional handshakes are required. As QUIC streams are delivered independently, packet loss affecting one stream will not affect the others.
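The difference between connection-wide ordering and independent stream delivery can be sketched with a small simulation. It is an abstract model under stated assumptions: packets are sent one per tick, a single lost packet arrives late after a fixed retransmission delay, and times are in arbitrary ticks. The function names are illustrative and do not come from any real library.

```python
from typing import Dict, List


def arrival_times(num_packets: int, lost_index: int, retransmit_delay: int) -> List[int]:
    """Packet i arrives at tick i; the lost packet arrives retransmit_delay ticks later."""
    return [i + (retransmit_delay if i == lost_index else 0) for i in range(num_packets)]


def completion_tcp_like(packet_streams: List[str], arrivals: List[int]) -> Dict[str, int]:
    """One ordered byte stream: data is released strictly in send order."""
    done: Dict[str, int] = {}
    released_at = 0
    for stream_id, arrived in zip(packet_streams, arrivals):
        # A packet is released only once everything sent before it has arrived.
        released_at = max(released_at, arrived)
        done[stream_id] = released_at
    return done


def completion_quic_like(packet_streams: List[str], arrivals: List[int]) -> Dict[str, int]:
    """Independent streams: a stream waits only for its own packets."""
    done: Dict[str, int] = {}
    for stream_id, arrived in zip(packet_streams, arrivals):
        done[stream_id] = max(done.get(stream_id, 0), arrived)
    return done


if __name__ == "__main__":
    streams = ["A", "B", "C", "A", "B", "C"]  # which stream each packet belongs to
    arrivals = arrival_times(len(streams), lost_index=0, retransmit_delay=10)
    print(completion_tcp_like(streams, arrivals))
    print(completion_quic_like(streams, arrivals))
```

In this run the lost packet belongs to stream A. With connection-wide ordering, all three streams finish at tick 10; with independent delivery, only A waits for the retransmission while B and C finish at ticks 4 and 5.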

QUIC also combines the typical three-way TCP handshake with the TLS 1.3 handshake. This gives users encryption and authentication by default and enables faster connection establishment. “In other words, even when a new QUIC connection is required for the initial request in an HTTP session, the latency incurred before data starts flowing is lower than that of TCP with TLS,” Cloudflare explains.
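A back-of-the-envelope calculation shows where the saving comes from. The sketch assumes a brand-new connection with no session resumption: the TCP handshake costs one round trip before the client can send data, a full TLS 1.3 handshake costs another, and QUIC performs its transport and TLS 1.3 handshakes together in a single round trip. The constant and function names are illustrative.

```python
TCP_HANDSHAKE_RTTS = 1    # SYN / SYN-ACK before the client can send data
TLS13_HANDSHAKE_RTTS = 1  # full TLS 1.3 handshake, run after TCP is established
QUIC_HANDSHAKE_RTTS = 1   # transport and TLS 1.3 handshakes combined


def setup_latency_ms(rtt_ms: float, protocol: str) -> float:
    """Time spent on handshakes before the first request can be sent."""
    round_trips = {
        "tcp+tls1.3": TCP_HANDSHAKE_RTTS + TLS13_HANDSHAKE_RTTS,
        "quic": QUIC_HANDSHAKE_RTTS,
    }[protocol]
    return round_trips * rtt_ms


if __name__ == "__main__":
    for protocol in ("tcp+tls1.3", "quic"):
        print(protocol, setup_latency_ms(50.0, protocol))
```

With a 50 ms round-trip time, this model gives 100 ms of setup for TCP with TLS 1.3 and 50 ms for QUIC, so the absolute saving grows with network latency.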

On Hacker News, a few users discussed the differences between HTTP/1, HTTP/2, and HTTP/3. Comparing the three, one user commented, “Not aware of benchmarks, but specification-wise I consider HTTP2 to be a regression…I’d rate them as follows:

HTTP3 > HTTP1.1 > HTTP2

QUIC is an amazing protocol…However, the decision to make HTTP2 traffic go all through a single TCP socket is horrible and makes the protocol very brittle under even the slightest network delay or packet loss…Sure it CAN work better than HTTP1.1 under ideal network conditions, but any network degradation is severely amplified, to a point where even for traffic within a datacenter can amplify network disruption and cause an outage.

HTTP3, however, is a refinement on those ideas and gets pretty much everything right afaik.”

Some users argued that the creators of HTTP/3 should also focus on the “real” issues of HTTP, including proper session support and getting rid of cookies. Others appreciated this step, saying, “It’s kind of amazing seeing positive things from monopolies and evergreen updates. These institutions can roll out things fast. It’s possible in hardware too– remember Bell Labs in its hay days?”

These were some of the advantages HTTP/3 and QUIC provide over HTTP/2. Read the official announcement by Cloudflare for more details.
