OpenAI and Google have introduced a new technique called “activation atlases” for visualizing the interactions between neurons. The technique aims to provide a better understanding of the internal decision-making processes of AI systems and to identify their weaknesses and failures. Activation atlases build on “feature visualization”, a technique for understanding what the hidden layers of a neural network can represent, which in turn makes machine learning more accessible and interpretable.

“Because essential details of these systems are learned during the automated training process, understanding how a network goes about its given task can sometimes remain a bit of a mystery”, says Google.

Activation atlases aim to answer the question of what an image classification neural network actually “sees” when it is given an image, thus giving users insight into the hidden layers of the network.

OpenAI states that “With activation atlases, humans can discover unanticipated issues in neural networks–for example, places where the network is relying on spurious correlations to classify images, or where re-using a feature between two classes leads to strange bugs. Humans can even use this understanding to “attack” the model, modifying images to fool it.”

How Activation Atlases Work

The activation atlases are built from a convolutional image classification network, Inception v1, trained on the ImageNet dataset. This network progressively evaluates image data through about ten layers. Every layer is made up of hundreds of neurons, and every neuron activates to varying degrees on different types of image patches.
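To make the idea of neurons activating "to varying degrees" on image patches concrete, here is a toy sketch. It is not Inception v1: the three neurons, their hand-written weights, the 2x2 patches, and the function names are all hypothetical, chosen only to show that a layer turns each patch into a vector with one activation per neuron.

```python
def relu(x):
    """Rectified linear unit: negative inputs become zero."""
    return x if x > 0.0 else 0.0


def layer_activations(patch, weights):
    """Return one activation per neuron for a flattened image patch."""
    return [
        relu(sum(w * p for w, p in zip(neuron_weights, patch)))
        for neuron_weights in weights
    ]


# Hypothetical 2x2 grayscale patches, flattened row by row.
bright_top = [1.0, 1.0, 0.0, 0.0]
bright_left = [1.0, 0.0, 1.0, 0.0]

# Hypothetical hand-written weights for a toy layer of three neurons.
toy_weights = [
    [1.0, 1.0, -1.0, -1.0],    # prefers a bright top row
    [1.0, -1.0, 1.0, -1.0],    # prefers a bright left column
    [0.25, 0.25, 0.25, 0.25],  # responds to overall brightness
]

top_vec = layer_activations(bright_top, toy_weights)
left_vec = layer_activations(bright_left, toy_weights)
print(top_vec)   # activation vector for the first patch
print(left_vec)  # activation vector for the second patch
```

Each patch produces a different activation vector. In a real network these vectors have hundreds of entries per layer, which is why they are hard to inspect directly.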

An activation atlas is built by collecting the internal activations from each of these layers as the network processes a large set of images. These activations are high-dimensional vectors, which are projected into a useful 2D layout using UMAP, a dimensionality-reduction technique.

The activation vectors then need to be aggregated into a more manageable number. To do this, a grid is drawn over the 2D layout. For every cell in the grid, all the activations that lie within the boundaries of that cell are averaged. Feature visualization is then applied to each averaged activation to create the final representation.
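The grid-averaging step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: it assumes the 2D coordinates have already been produced by UMAP (or any other reducer), and the function name `average_by_grid_cell`, its parameters, and the sample data are hypothetical. The final feature visualization step is not shown.

```python
from collections import defaultdict


def average_by_grid_cell(points_2d, activations, grid_size):
    """Average the activation vectors whose 2D points share a grid cell.

    points_2d   -- list of (x, y) layout coordinates, one per activation
    activations -- list of equal-length activation vectors
    grid_size   -- number of cells along each axis (hypothetical parameter)
    """
    if not points_2d or len(points_2d) != len(activations):
        raise ValueError("need one 2D point per activation vector")

    xs = [x for x, _ in points_2d]
    ys = [y for _, y in points_2d]
    min_x, min_y = min(xs), min(ys)
    # Avoid division by zero when all points share a coordinate.
    span_x = (max(xs) - min_x) or 1.0
    span_y = (max(ys) - min_y) or 1.0

    def cell_index(value, low, span):
        # Map a coordinate to a cell index; clamp the maximum into the last cell.
        return min(int((value - low) / span * grid_size), grid_size - 1)

    # Group activation vectors by the grid cell their 2D point falls in.
    buckets = defaultdict(list)
    for (x, y), vec in zip(points_2d, activations):
        cell = (cell_index(x, min_x, span_x), cell_index(y, min_y, span_y))
        buckets[cell].append(vec)

    # Average each group component by component.
    return {
        cell: [sum(column) / len(vecs) for column in zip(*vecs)]
        for cell, vecs in buckets.items()
    }


# Hypothetical data: four 2D layout points with 2-dimensional activations.
points = [(0.0, 0.0), (0.1, 0.2), (1.0, 1.0), (0.9, 0.8)]
acts = [[1.0, 0.0], [3.0, 2.0], [0.0, 4.0], [0.0, 6.0]]
atlas_cells = average_by_grid_cell(points, acts, grid_size=2)
print(atlas_cells)
```

Only cells that contain at least one activation appear in the result, so sparse regions of the layout simply leave gaps in the grid. Each averaged vector would then be passed to feature visualization to produce the image for its cell.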

An example of Activation Atlas

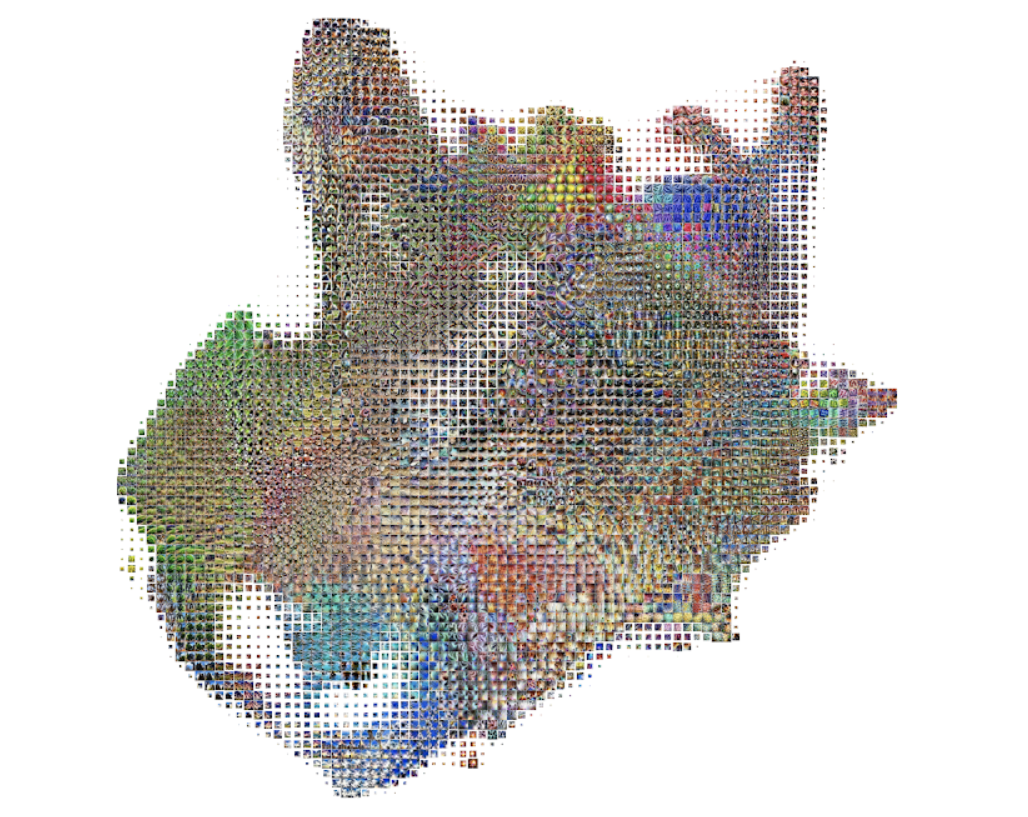

Here is an activation atlas for just one layer in a neural network:

An overview of an activation atlas for one of the many layers within Inception v1

Source: Google AI blog

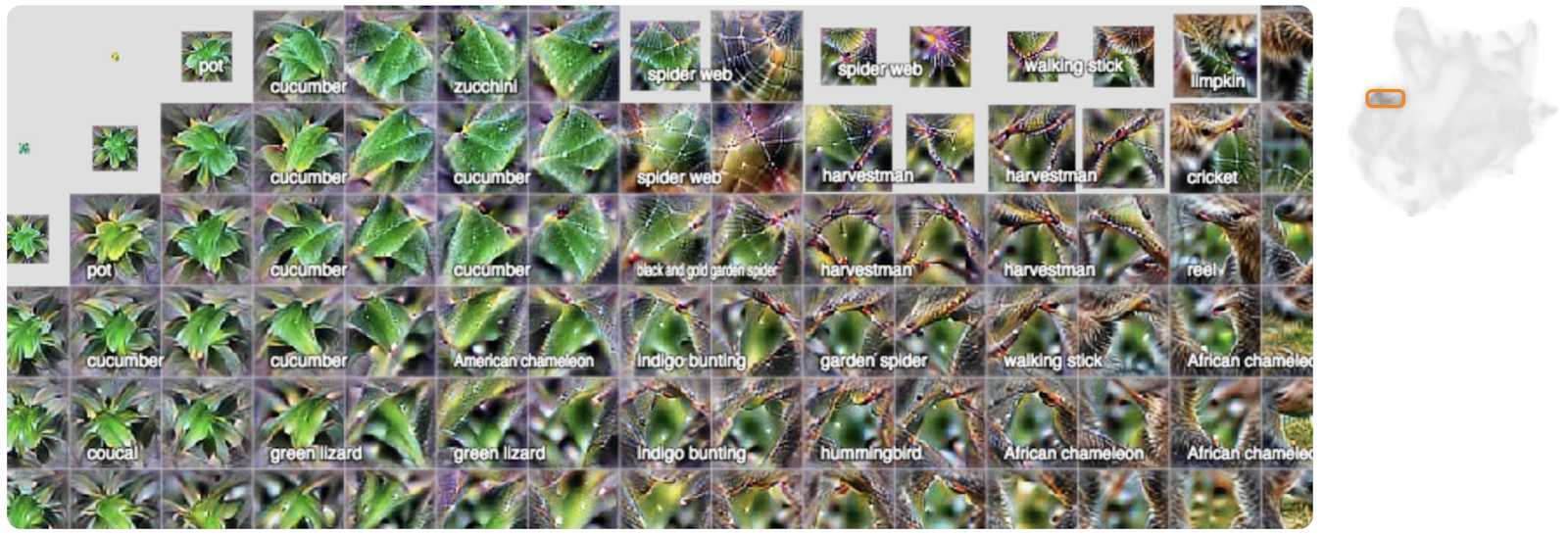

Detectors for different types of leaves and plants

Source: Google AI blog

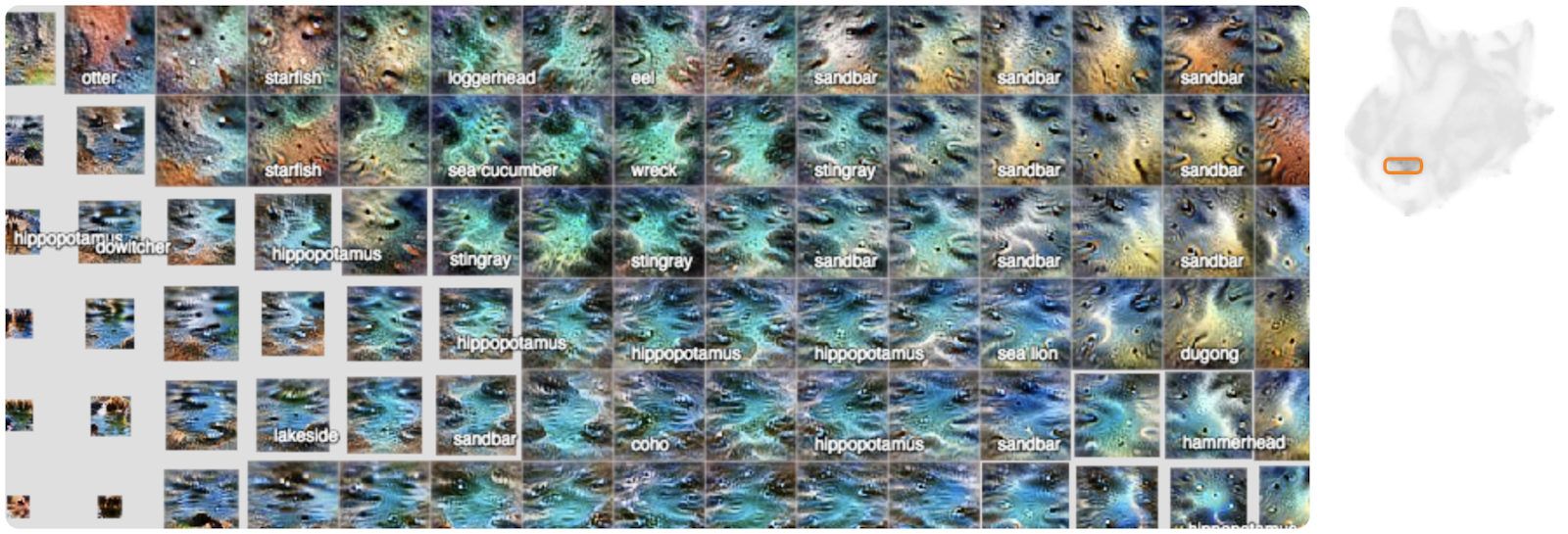

Detectors for water, lakes and sandbars

Source: Google AI blog

Researchers hope that this paper will provide users with a new way to peer into convolutional vision networks. This, in turn, will enable them to see the inner workings of complicated AI systems in a simplified way.

