In this article, Raja Malleswara Rao Pattamsetti, the author of the book Distributed Computing in Java 9, looks at how the processing needs of organizational applications often exceed what a conventional sequential computer configuration can deliver. This can be addressed to an extent by increasing processor capacity and allocating more resources. While that improves performance for a while, future computational requirements are constrained by the limits on adding ever more powerful processors and by the cost of producing such systems. There is also a need for efficient algorithms and practices to get the best results out of the hardware. A practical and economical alternative to a single high-powered computer is to set up multiple low-powered processors that work together and coordinate their processing capabilities, resulting in a powerful system: a parallel computer, in which processing activities are distributed among multiple low-capacity computers that together produce the expected result.

In this article, we will cover the following topics:

- Era of Computing

- Commanding Parallel System Architectures

- MPP: Massively Parallel Processors

- SMP: Symmetric Multiprocessors

- CC-NUMA: Cache Coherent Nonuniform Memory Access

- Distributed Systems

- Clusters

- Java support for High-Performance Computing


Era of Computing

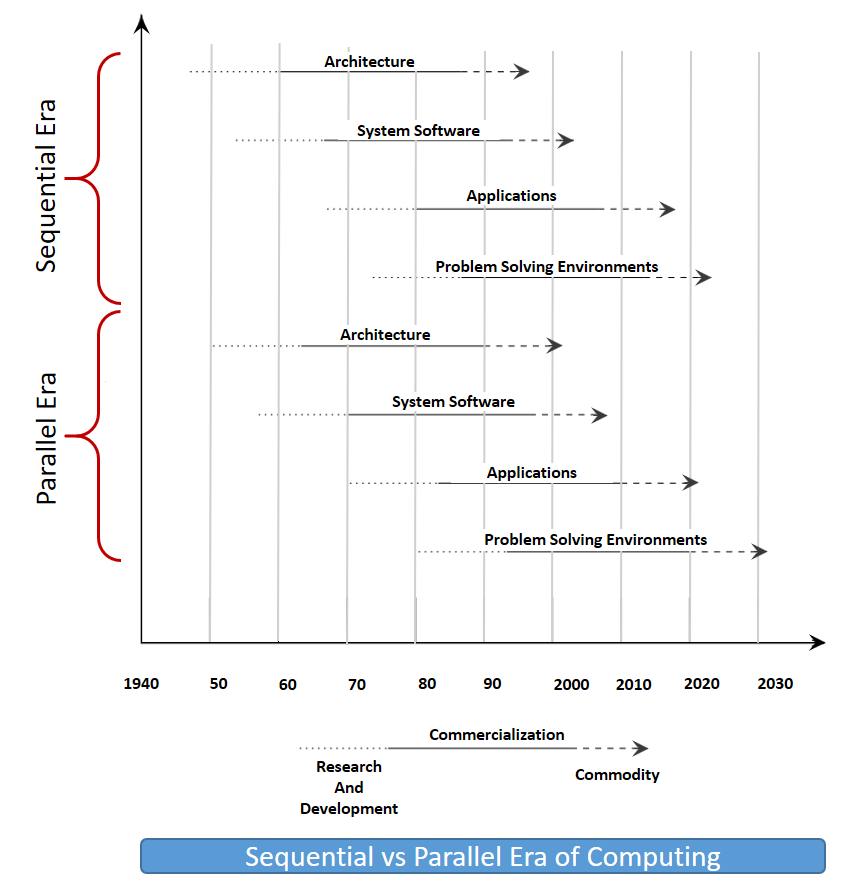

The technological developments are rapid in the industry of computing with the help of advancements in system software and hardware. The hardware advancements are around the processor advancements and their making techniques, and high-performance processors are getting generated in amazingly low cost. The hardware advancements are further boosted by the high-bandwidth and low-latency network systems. Very Large Scale Integration (VLSI) as a concept brought several phenomenal advancements in producing commanding chronological and parallel processing computers. Simultaneously, the System Software advancements have improved the ability of operating system, advanced software programming development techniques. It was observed as two commanding computing eras, namely Sequential and Parallel Era of Computing. The following diagram shows the advancements in both the Era of Computing from last few decades to the forecast for next two decades:

In each of these eras, growth in hardware architecture is followed by advances in system software: as more powerful hardware evolved, software programs and operating system capabilities advanced correspondingly. Applications and problem-solving environments then build on top of these, as seen with the advent of parallel computing, the move from mini- to microprocessors, and clustered computers.

Let’s now review some of the commanding parallel system architectures from the last few years.

Commanding parallel system architectures

Over the last few years, a variety of computing models offering high processing performance have been developed. They are classified by how memory is allocated to their processors and how the processors are arranged. Some of the important parallel systems are as follows:

- MPP: Massively Parallel Processors

- SMP: Symmetric Multiprocessors

- CC-NUMA: Cache Coherent Nonuniform Memory Access

- Distributed Systems

- Clusters

Let’s now look at each of these system architectures in a little more detail and review some of their important performance characteristics.

Massively Parallel Processors (MPP)

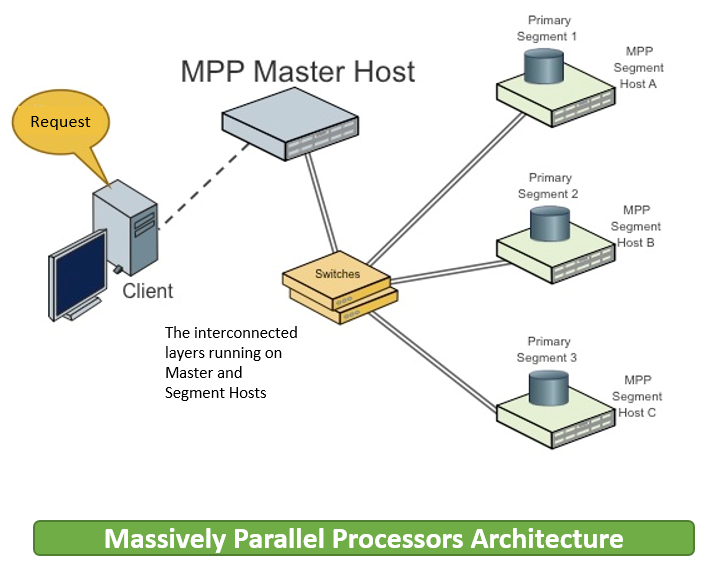

Massively Parallel Processors (MPP), as the name advises, are a huge parallel processing system developed in no sharing architecture. Such systems usually contain large number of processing nodes that are integrated with a high-speed interconnected network switch. Node is nothing but an element of computer with diverse hardware component combination, usually containing a memory unit and more than on processor. Some of the purpose nodes are designed to have backup disks or additional storage capacity.

The following diagram represents the massively parallel processors architecture:
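To make the shared-nothing idea concrete, here is a minimal sketch in Java. It is only an illustration under stated assumptions: threads stand in for MPP nodes, a `BlockingQueue` stands in for the interconnect, and the names `SharedNothingSketch`, `Node`, and `distributedSum` are hypothetical. Each node works on a private copy of its data and communicates only by sending a message.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical sketch: threads play the role of MPP nodes,
// and a queue plays the role of the high-speed interconnect.
class SharedNothingSketch {

    // A node owns its data privately and only talks to others through messages.
    static final class Node implements Runnable {
        private final long[] localData;                  // this node's private memory
        private final BlockingQueue<Long> interconnect;  // message channel to the coordinator

        Node(long[] localData, BlockingQueue<Long> interconnect) {
            this.localData = localData;
            this.interconnect = interconnect;
        }

        @Override
        public void run() {
            long partial = 0;
            for (long value : localData) {
                partial += value;
            }
            try {
                interconnect.put(partial); // send the partial result as a message
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
    }

    // Splits the data into private slices (nodeCount must be positive),
    // starts one node per slice, and adds up the messages received.
    static long distributedSum(long[] data, int nodeCount) {
        BlockingQueue<Long> interconnect = new LinkedBlockingQueue<>();
        List<Thread> nodes = new ArrayList<>();
        int chunk = (data.length + nodeCount - 1) / nodeCount;
        for (int i = 0; i < nodeCount; i++) {
            int from = Math.min(i * chunk, data.length);
            int to = Math.min(from + chunk, data.length);
            // copyOfRange gives the node its own copy, so no memory is shared
            Thread node = new Thread(new Node(Arrays.copyOfRange(data, from, to), interconnect));
            nodes.add(node);
            node.start();
        }
        try {
            long total = 0;
            for (int i = 0; i < nodeCount; i++) {
                total += interconnect.take(); // one message per node
            }
            for (Thread node : nodes) {
                node.join();
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for nodes", e);
        }
    }

    public static void main(String[] args) {
        long[] data = new long[1000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(distributedSum(data, 4)); // sum of 1..1000 = 500500
    }
}
```

In a real MPP system the nodes are separate machines and the messages travel over the interconnect, but the programming shape is similar: partition the data, compute locally, exchange results.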

Symmetric Multiprocessors (SMP)

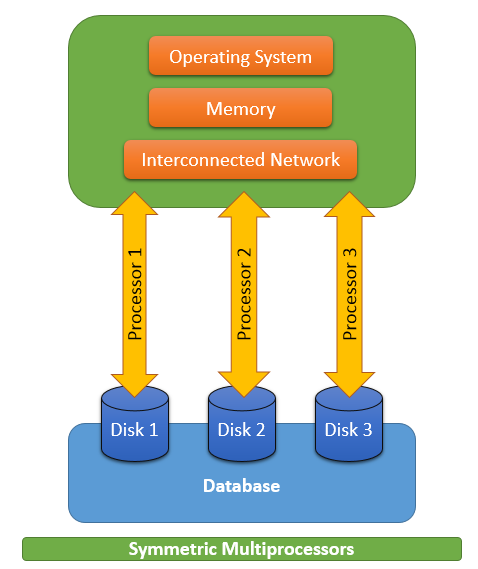

Symmetric Multiprocessors (SMP), as the name advises, contain a set of limited number of processors ranging from 2 to 64 processors and share most of the resources among those processors. One instance of the operating system will be operating together on all these connected processors while they commonly share the I/O, memory, and the network bus. Based on the nature of similar set of processors connected and acting together as on operating system is the essential behavior of symmetric multiprocessors.

The following diagram depicts the symmetric multiprocessors representation:
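The shared-memory style of an SMP machine maps naturally onto Java threads inside one JVM. The sketch below is an illustration only, with hypothetical names (`SharedMemorySketch`, `parallelSum`): several worker threads read different ranges of the same array, which lives in memory shared by all processors, and no data is copied between workers.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical sketch: worker threads in one JVM read the same shared array.
class SharedMemorySketch {

    // Sums the array using the given (positive) number of worker threads.
    static long parallelSum(int[] shared, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<Future<Long>> parts = new ArrayList<>();
            int chunk = (shared.length + workers - 1) / workers;
            for (int w = 0; w < workers; w++) {
                final int from = Math.min(w * chunk, shared.length);
                final int to = Math.min(from + chunk, shared.length);
                // Each task reads its own index range of the one shared array
                Callable<Long> task = () -> {
                    long sum = 0;
                    for (int i = from; i < to; i++) {
                        sum += shared[i];
                    }
                    return sum;
                };
                parts.add(pool.submit(task));
            }
            long total = 0;
            for (Future<Long> part : parts) {
                total += part.get(); // wait for each worker's partial sum
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while summing", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("worker failed", e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        int[] data = new int[1_000_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = 1;
        }
        int workers = Runtime.getRuntime().availableProcessors();
        System.out.println(parallelSum(data, workers)); // prints 1000000
    }
}
```

Sizing the pool with `Runtime.getRuntime().availableProcessors()` is a common starting point, not a rule; the best worker count depends on the workload.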

Cache Coherent Nonuniform Memory Access (CC-NUMA)

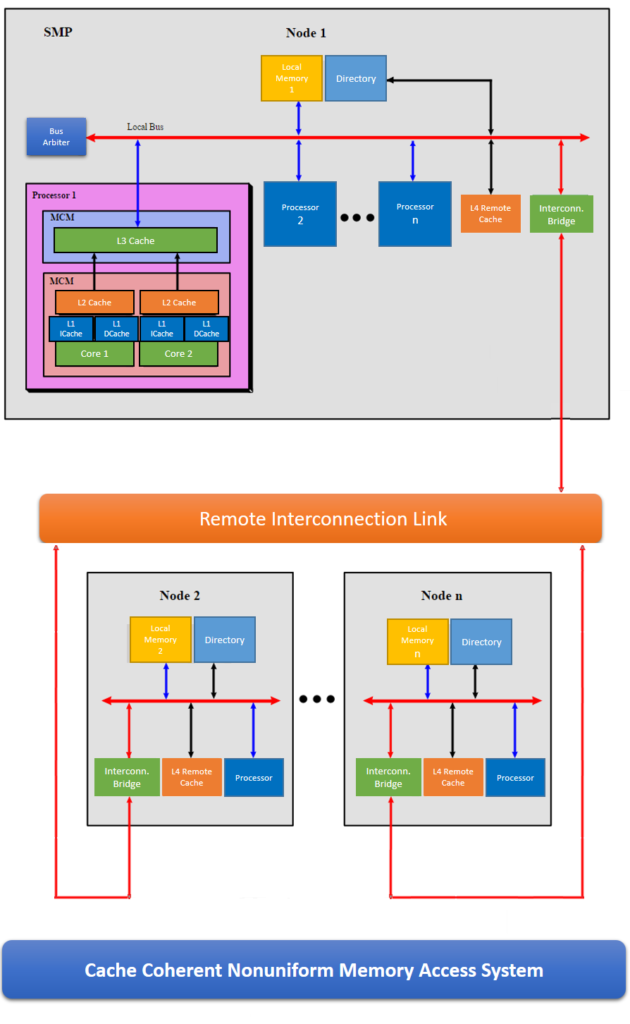

The following diagram represents the cache coherent nonuniform memory access architecture:

The nature of memory access is nonuniform. Just Like the symmetric multiprocessors, CC-NUMA system is a comprehensive sight to entire system memory, and as the name advises, it takes nonuniform time to access either the close or distant memory locations.
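A small back-of-the-envelope model can show why the placement of data matters on such a system. The sketch below is a simplification with made-up numbers: the class `NumaAccessModel`, the method `averageAccessNanos`, and the latencies of 100 ns (local) and 300 ns (remote) are assumptions for illustration, not measurements of any real machine.

```java
// Hypothetical model: average memory access time when a fraction of
// accesses hit local memory and the rest go to a distant node.
class NumaAccessModel {

    static double averageAccessNanos(double localFraction, double localNanos, double remoteNanos) {
        if (localFraction < 0.0 || localFraction > 1.0) {
            throw new IllegalArgumentException("localFraction must be between 0 and 1");
        }
        // Weighted average of the two access times
        return localFraction * localNanos + (1.0 - localFraction) * remoteNanos;
    }

    public static void main(String[] args) {
        // Assumed latencies, chosen only to illustrate the effect
        double localNanos = 100.0;
        double remoteNanos = 300.0;
        System.out.println(averageAccessNanos(1.0, localNanos, remoteNanos));  // 100.0
        System.out.println(averageAccessNanos(0.75, localNanos, remoteNanos)); // 150.0
        System.out.println(averageAccessNanos(0.0, localNanos, remoteNanos));  // 300.0
    }
}
```

Under these assumed figures, sending a quarter of the accesses to distant memory raises the average access time by half, which is why keeping data close to the processor that uses it is a common concern on NUMA hardware.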

Distributed systems

Distributed systems, as discussed in previous articles, are conventional sets of individual computers interconnected through the Internet or an intranet, each running its own operating system. Any diverse set of computer systems can be part of a distributed system, including combinations of Massively Parallel Processors, Symmetric Multiprocessors, distinct computers, and clusters.

Clusters

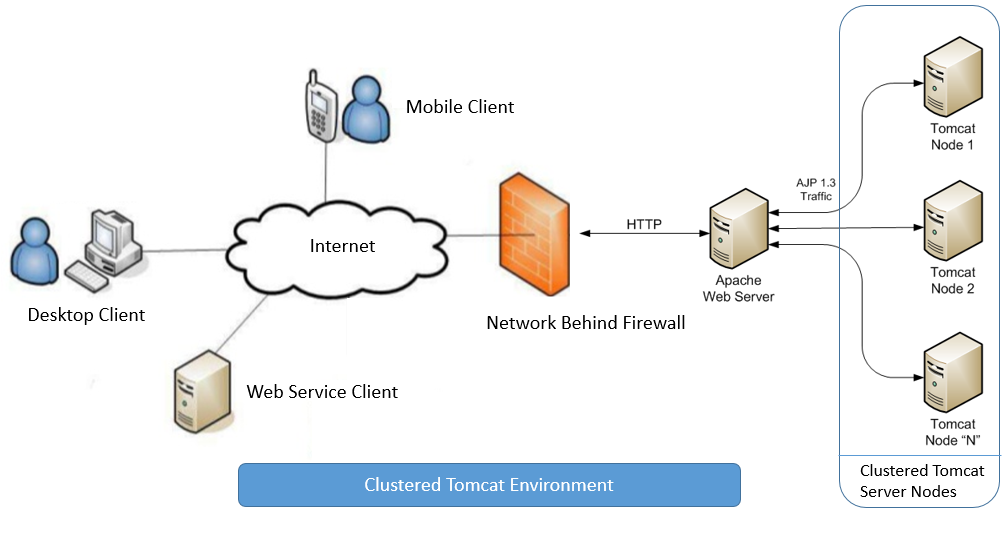

A cluster is a collection of workstations or computers connected through a network to address a large processing requirement through parallel processing. Clusters are therefore usually configured with workstations or computers that have high processing capabilities and are connected by a high-speed network. A cluster is usually regarded as a single system image that integrates a number of nodes into one pool of resources.
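The idea of several nodes appearing as one system can be sketched with a tiny front end that hides the nodes behind a single entry point. This is an illustration only: the class `ClusterFrontEnd`, the method `route`, and the node names are hypothetical, and round-robin is just one simple policy chosen for the example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch: callers see one entry point, while requests
// are spread over the cluster nodes in round-robin order.
class ClusterFrontEnd {
    private final List<String> nodes;
    private final AtomicInteger next = new AtomicInteger();

    ClusterFrontEnd(List<String> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("a cluster needs at least one node");
        }
        this.nodes = new ArrayList<>(nodes); // defensive copy
    }

    // Picks the next node in turn and reports which node takes the request.
    String route(String request) {
        int index = Math.floorMod(next.getAndIncrement(), nodes.size());
        return nodes.get(index) + " handles " + request;
    }

    public static void main(String[] args) {
        ClusterFrontEnd cluster = new ClusterFrontEnd(Arrays.asList("node-1", "node-2", "node-3"));
        for (int i = 1; i <= 4; i++) {
            System.out.println(cluster.route("request-" + i));
        }
        // The fourth request wraps around to node-1 again
    }
}
```

Real cluster front ends usually also track node health and load, which this sketch leaves out.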

The following diagram shows a sample clustered Tomcat server setup for a typical J2EE web application deployment:

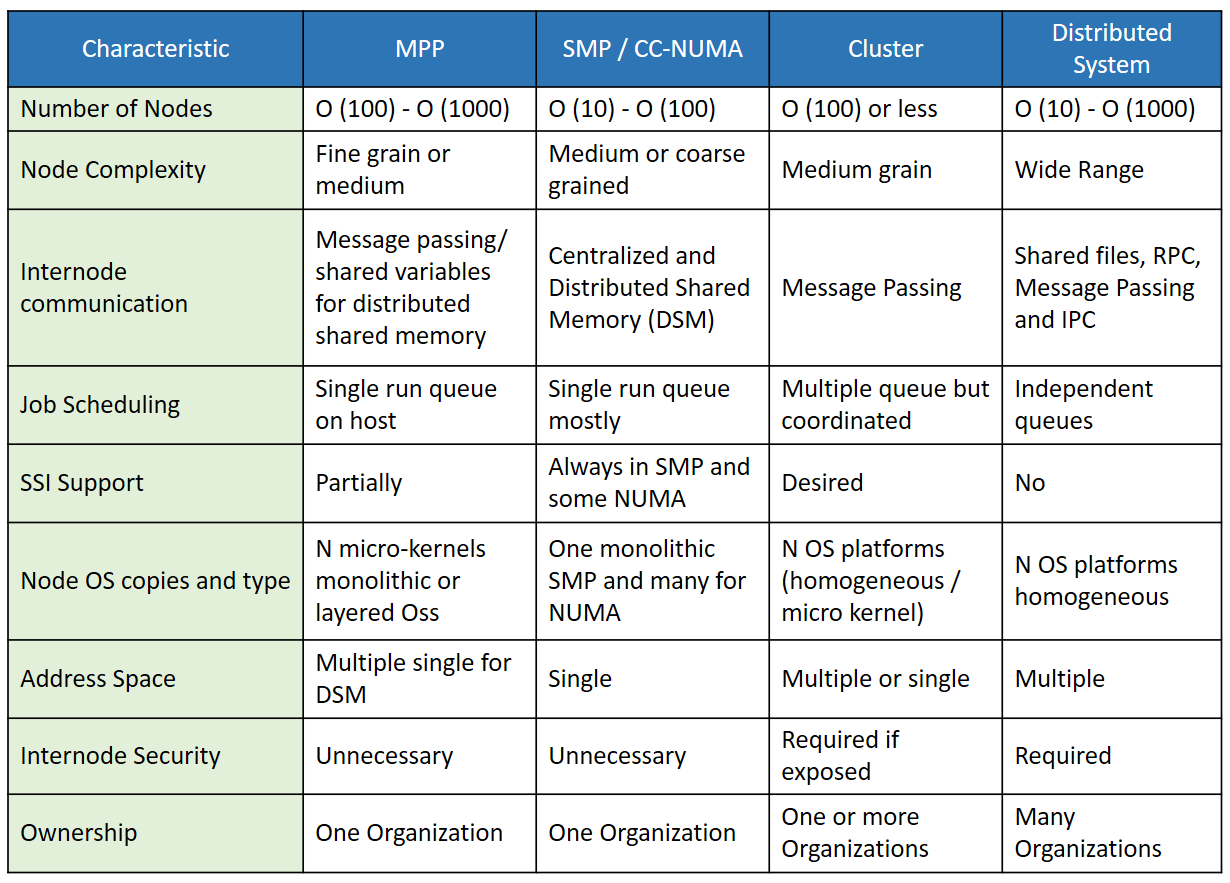

The following table shows some of the important performance characteristics of the different parallel architecture systems discussed so far:

Network of workstations

A network of workstations is a group of connected resources, such as system processors, network interfaces, storage, and data disks, that opens up new combinations such as:

- Parallel Computing: As discussed in the previous sections, the group of system processors can be connected as MPP or as distributed shared memory (DSM) to obtain parallel processing ability.

- RAM Network: As a number of systems are connected, their memories can work collectively as a DRAM cache, which greatly expands the virtual memory of the entire system and improves its processing ability.

- Software Redundant Array of Inexpensive Disks (RAID): A group of systems connected in an array on the local area network improves system stability, availability, and storage capacity using multiple low-cost systems. This also provides support for simultaneous I/O.

- Multipath Communication: Multipath communication is a technique that uses more than one network connection between the workstations to allow simultaneous information exchange among system nodes.

Java support for High-Performance Computing

Java offers numerous advantages as a programming language for HPC (High-Performance Computing), particularly with Grid computing. Some of the key benefits of using Java in HPC include the following:

- Portability: The ability to write a program on one platform and run it on any other has been the biggest strength of the Java language. This continues to be an advantage when porting Java applications to HPC systems. In Grid computing, this is an essential feature, as the execution environment is decided only at run time. It is possible because Java bytecode executes in the Java Virtual Machine (JVM), which itself acts as an abstract operating environment.

- Network Centricity: As discussed in previous articles, Java provides strong support for distributed systems through its network-centric features, such as remote procedure calls (RPC) through RMI and CORBA services. Along with these, Java's support for socket programming is another great asset for Grid computing.

- Software Engineering: Another great feature of Java is how well it supports engineering solutions. We can produce object-oriented, loosely coupled software that can be added independently as an API, through JAR files, to multiple other systems. Encapsulation and programming to interfaces are a great advantage in such application development.

- Security: Java is a highly secure programming language, with features such as bytecode verification that limit the resources available to untrusted software. This is a great advantage when running applications in a distributed environment with remote communication over a shared network.

- GUI development: Java's support for platform-independent GUI development is a great advantage when developing and deploying enterprise web applications that users interact with in an HPC environment.

- Availability: Java is well supported on multiple operating systems, including Windows, Linux, SGI, and Compaq. It is readily available, and development benefits from a large set of open source frameworks built on top of it.
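As a small illustration of the socket support mentioned under Network Centricity, the following sketch sends one line of text to a server and reads the reply. It is a minimal example under stated assumptions: both ends run in the same process on the loopback address so that it is self-contained, and the names `SocketEchoSketch`, `roundTrip`, and `serveOnce` are hypothetical. In a real deployment the two ends would run on different machines.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch: a one-shot echo exchange over a TCP socket on loopback.
class SocketEchoSketch {

    // Starts a one-shot server on a free port, sends a single-line message, returns the reply.
    static String roundTrip(String message) {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (ServerSocket server = new ServerSocket(0, 1, loopback)) { // port 0 = any free port
            Thread worker = new Thread(() -> serveOnce(server));
            worker.start();
            try (Socket client = new Socket(loopback, server.getLocalPort());
                 PrintWriter out = new PrintWriter(
                         new OutputStreamWriter(client.getOutputStream(), StandardCharsets.UTF_8), true);
                 BufferedReader in = new BufferedReader(
                         new InputStreamReader(client.getInputStream(), StandardCharsets.UTF_8))) {
                out.println(message);         // autoflush sends the line immediately
                String reply = in.readLine(); // blocks until the server answers
                worker.join();
                return reply;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted during round trip", e);
        }
    }

    // Accepts one connection, reads one line, and answers with an "echo: " prefix.
    private static void serveOnce(ServerSocket server) {
        try (Socket peer = server.accept();
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(peer.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(peer.getOutputStream(), StandardCharsets.UTF_8), true)) {
            out.println("echo: " + in.readLine());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("hello grid")); // prints "echo: hello grid"
    }
}
```

RMI and CORBA build higher-level remote calls on top of this kind of connection; plain sockets give the most control at the cost of defining the message format yourself.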

While the points above favor using Java in HPC environments, some concerns need to be reviewed when designing Java applications, including numerics (complex numbers, fastfp, and multidimensional arrays), performance, and parallel computing designs.
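The complex numbers concern is easy to see in practice: Java has no built-in complex type, so numerical code has to define its own. The class below is a minimal sketch of one way to do that, not a standard API; the name `Complex` and its methods are chosen for this example only.

```java
// Hypothetical sketch of an immutable complex number type,
// since the Java language does not provide one.
final class Complex {
    final double re;
    final double im;

    Complex(double re, double im) {
        this.re = re;
        this.im = im;
    }

    Complex plus(Complex other) {
        return new Complex(re + other.re, im + other.im);
    }

    // (a + bi)(c + di) = (ac - bd) + (ad + bc)i
    Complex times(Complex other) {
        return new Complex(re * other.re - im * other.im,
                           re * other.im + im * other.re);
    }

    // Magnitude; Math.hypot avoids intermediate overflow
    double abs() {
        return Math.hypot(re, im);
    }

    @Override
    public String toString() {
        return re + (im < 0 ? " - " : " + ") + Math.abs(im) + "i";
    }

    public static void main(String[] args) {
        Complex a = new Complex(1, 2);
        Complex b = new Complex(3, 4);
        System.out.println(a.plus(b));   // 4.0 + 6.0i
        System.out.println(a.times(b));  // -5.0 + 10.0i
    }
}
```

Every arithmetic operation here allocates a new object, which hints at the performance side of the concern: heavy numerical loops written this way can put noticeable pressure on the garbage collector.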

Summary

In this article, you learned about the era of computing, the commanding parallel system architectures (MPP: Massively Parallel Processors, SMP: Symmetric Multiprocessors, CC-NUMA: Cache Coherent Nonuniform Memory Access, distributed systems, and clusters), and Java support for High-Performance Computing.

