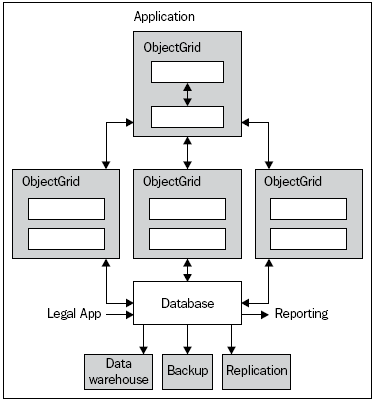

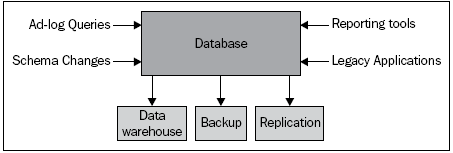

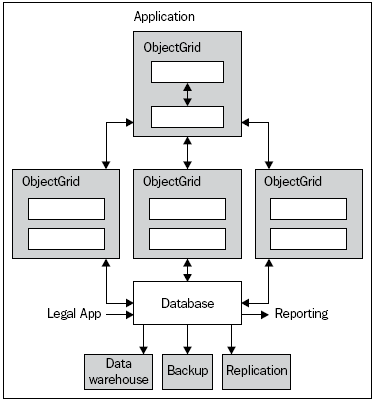

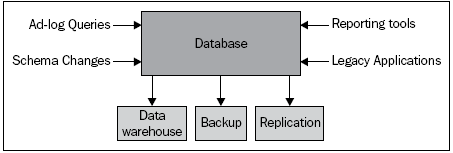

As stated above, there are three compelling reasons to integrate with a database backend. First, reporting tools do not have good data grid integration. CrystalReports and other reporting tools don't work with data grids right now. Loading data from a data grid into a data warehouse with existing tools isn't possible either.

The second reason we want to use a database with a data grid is when we have an extremely large data set. A data grid stores data in memory. Though much cheaper than in the past, system memory is still much more expensive than a typical magnetic hard disk. When dealing with extremely large data sets, we want to structure our data so that the most frequently used data is in the cache and less frequently used data is on the disk.
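The idea of keeping the hot data in memory and leaving the rest on disk can be sketched with a small bounded cache. This is only an illustration built on the standard `LinkedHashMap`. It evicts the least recently used entry, which is a common stand-in for "least frequently used" and is not necessarily how a data grid decides what to keep. The class name `HotDataCache` and the two-entry limit are assumptions made for the example.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical bounded cache: keeps at most maxEntries entries and evicts
// the least recently used one. In a real deployment the evicted data would
// still be available from the database on disk.
class HotDataCache<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    HotDataCache(int maxEntries) {
        // The third argument (true) orders entries by access, oldest first.
        super(16, 0.75f, true);
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        // Called after every put; returning true drops the eldest entry.
        return size() > maxEntries;
    }

    public static void main(String[] args) {
        HotDataCache<String, String> cache = new HotDataCache<>(2);
        cache.put("a", "1");
        cache.put("b", "2");
        cache.get("a");       // touch "a" so that "b" is now the coldest entry
        cache.put("c", "3");  // over the limit, so "b" is evicted
        System.out.println(cache.keySet()); // prints [a, c]
    }
}
```

The entries that stay in memory are the ones the application touched most recently, which is usually a reasonable approximation of the frequently used part of a large data set.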

The third compelling reason to use a database with a data grid is that our application may need to work with legacy applications that have been using relational databases for years. Our application may need to provide more data to them, or operate on data already in the legacy database in order to stay ahead of a processing load.

In this article, we will explore some of the good and not-so-good uses of an in-memory data grid. We'll also look at integrating WebSphere eXtreme Scale with relational databases.

You're going where?

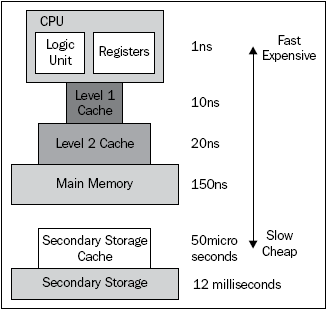

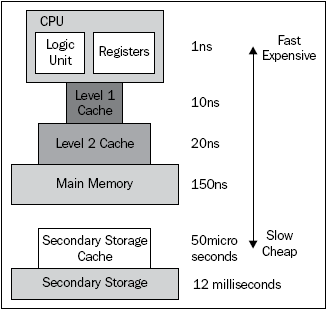

Somewhere along the way, we all learned that software consists of algorithms and data. CPUs load instructions from our compiled algorithms, and those instructions operate on bits representing our data. The closer our data lives to the CPU, the faster our algorithm can use it. On the x86 CPU, the registers are the closest we can store data to the instructions executed by the CPU.

CPU registers are also the smallest and most expensive data storage location. The amount of data storable in registers is fixed because the number and size of CPU registers is fixed. Typically, we don't directly interact with registers because their correct usage is important to our application performance. We let the compiler writers handle translating our algorithms into machine code. The compiler knows better than we do, and will use register storage far more effectively than we will most of the time.

Less expensive, and about an order of magnitude slower, we have the Level 1 cache on a CPU (see below). The Level 1 cache holds significantly more data than the combined storage capacity of the CPU registers. Reading data from the Level 1 cache, and copying it to a register, is still very fast. The Level 1 cache on my laptop has two 32K instruction caches, and two 32K data caches.

Still less expensive, and another order of magnitude slower, is the Level 2 cache. The Level 2 cache is typically much larger than the Level 1 cache. I have 4MB of Level 2 cache on my laptop. The contents of the Library of Congress still won't fit into that 4MB, but 4MB isn't a bad amount of data to keep near the CPU.

Up another level, we come to the main system memory. Consumer level PCs come with 4GB RAM. A low-end server won't have any less than 8GB. At this point, we can safely store a large chunk of data, if not all of the data, used by an application. Once the application exits, its data is unloaded from the main memory, and all of the data is lost. In fact, once our data is evicted from any storage at or below this level, it is lost. Our data is ephemeral unless it is put onto some secondary storage. Access times for registers, the Level 1 and Level 2 caches, and main memory are all measured in nanoseconds.

Getting to secondary storage, we jump up an SI prefix, from nanoseconds to microseconds. Accessing data in the secondary storage cache is on the order of microseconds. If the data is not in cache, the access time is on the order of milliseconds. A millisecond is one million nanoseconds, so accessing data on a hard drive platter is roughly a million times slower than accessing that same data in main memory, and slower still compared with accessing it in a register. However, secondary storage is very cheap and holds far more than primary storage. Data stored in secondary storage is durable. It doesn't disappear when the computer is reset after a crash.

Our operations teams comfortably build secondary storage silos to store petabytes of data. We typically build our applications so the application server interacts with some relational database management system that sits in front of that storage silo. The network hop to communicate with the RDBMS is on the order of microseconds on a fast network, and milliseconds otherwise.

Sharing data between applications has been done with the disk + network + database approach for a long time. It's become the traditional way to build applications. A load balancer sits in front, and application servers or batch processes constantly communicate with a database to store data for the next process that needs it.

As we see with computer architecture, we insert data where it fits. We squeeze it as close to the CPU as possible for better performance. If a data segment doesn't fit in one level, we keep squeezing what fits into each higher storage level. That leaves us with a lot of unused memory and disk space in an application deployment. Storing data in memory is preferable to storing it on a hard drive. Memory segmentation in a deployment has made it difficult to store useful amounts of data only a few milliseconds away. We just use a massive, but slow, database instead.

Where does an IMDG fit?

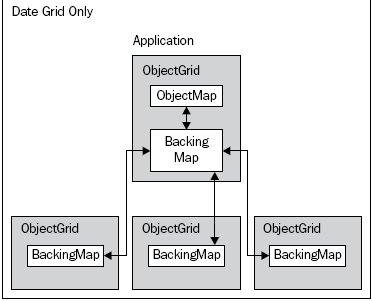

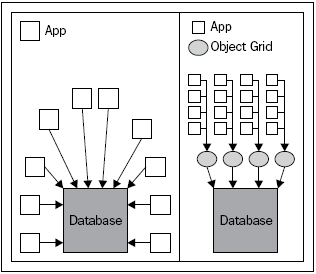

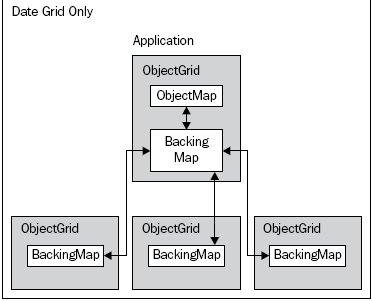

We've used ObjectGrid to store all of our data so far. This diagram should look pretty familiar by now:

Because we're only using the ObjectGrid APIs, our data is stored in-memory. It is not persisted to disk. If our ObjectGrid servers crash, then our data is in jeopardy (we haven't covered replication yet). One way to get our data into a persistent store is to mark up our classes with some ORM framework like JPA. We can use the JPA API to persist, update, and remove our objects from a database after we perform the same operations on them using the ObjectMap or Entity APIs. The onus is on the application developer to keep both cache and database in sync:
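The burden of keeping two stores in sync by hand can be sketched as follows. This is not the ObjectGrid or JPA API: two plain in-process maps stand in for the grid map and the database table, and every name (`ManualSyncSketch`, `save`, `saveToGridOnly`) is hypothetical. The point is only that each write path has to remember both stores.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-ins: two in-process maps play the roles of the grid
// and the database. Neither is the ObjectGrid API nor the JPA API.
class ManualSyncSketch {
    private final Map<Long, String> gridMap = new HashMap<>();   // stands in for an ObjectMap
    private final Map<Long, String> database = new HashMap<>();  // stands in for a JPA-managed table

    // The application has to remember to write to both stores itself.
    void save(long id, String value) {
        gridMap.put(id, value);
        database.put(id, value);
    }

    // A code path that forgets the database write leaves the stores out of sync.
    void saveToGridOnly(long id, String value) {
        gridMap.put(id, value);
    }

    boolean inSync() {
        return gridMap.equals(database);
    }

    public static void main(String[] args) {
        ManualSyncSketch app = new ManualSyncSketch();
        app.save(1L, "payment-1");
        System.out.println("in sync after save: " + app.inSync());

        app.saveToGridOnly(2L, "payment-2");
        System.out.println("in sync after grid-only write: " + app.inSync());
    }
}
```

A single forgotten write is enough for the cache and the database to disagree, and nothing in this arrangement detects it.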


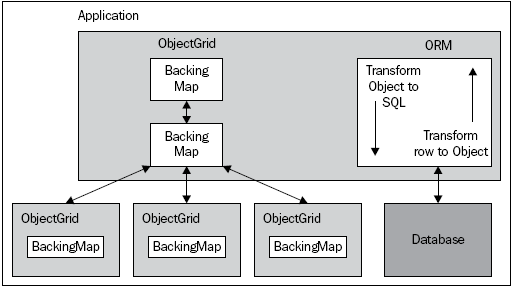

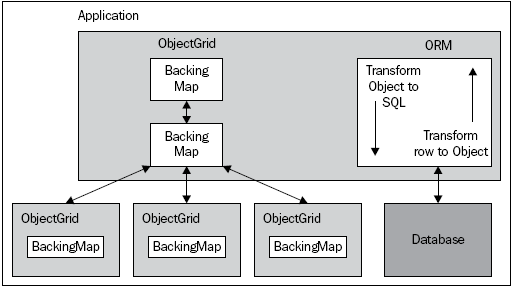

If we take this approach, we end up hand-writing synchronization code that the product already provides. WebSphere eXtreme Scale provides functionality to integrate with an ORM framework, or any data store, through Loaders. A Loader is a BackingMap plugin that tells ObjectGrid how to transform an object into the desired output form. Typically, we'll use a Loader with an ORM specification like JPA. WebSphere eXtreme Scale comes with a few different Loaders out of the box, but we can always write our own.

A Loader works in the background, transforming operations on objects into some output, whether it's file output or SQL queries. A Loader plugs into a BackingMap in an ObjectGrid server instance, or in a local ObjectGrid instance. A Loader does not plug into a client-side BackingMap, though we can override Loader settings on a client-side BackingMap.

While the Loader runs in the background, we interact with an ObjectGrid instance. We use the ObjectMap API for objects with zero or simple relationships, and the Entity API for objects with more complex relationships. The Loader handles all of the details in transforming an object into something that can integrate with external data stores.
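The division of labor described above, where the application talks only to the map and a plug-in talks to the data store, can be sketched in plain Java. The `SimpleLoader` interface below is a hypothetical simplification written for this example. It is not the real Loader plug-in interface, whose method signatures are not reproduced here, and `InMemoryStoreLoader` uses a map as a stand-in for a database.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical, simplified plug-in contract. NOT the real Loader interface.
interface SimpleLoader<K, V> {
    Optional<V> load(K key);     // called by the map on a cache miss
    void store(K key, V value);  // called by the map on every write
    void remove(K key);          // called by the map on every removal
}

// Stand-in for a database-backed loader; a real one would issue SQL or use an ORM.
class InMemoryStoreLoader implements SimpleLoader<String, String> {
    final Map<String, String> rows = new HashMap<>();
    public Optional<String> load(String key) { return Optional.ofNullable(rows.get(key)); }
    public void store(String key, String value) { rows.put(key, value); }
    public void remove(String key) { rows.remove(key); }
}

// The application only sees get/put/remove; the loader is invoked behind the scenes.
class LoaderBackedMap<K, V> {
    private final Map<K, V> cache = new HashMap<>();
    private final SimpleLoader<K, V> loader;

    LoaderBackedMap(SimpleLoader<K, V> loader) { this.loader = loader; }

    V get(K key) {
        V cached = cache.get(key);
        if (cached != null) return cached;          // cache hit
        Optional<V> loaded = loader.load(key);      // cache miss: read through
        loaded.ifPresent(v -> cache.put(key, v));
        return loaded.orElse(null);
    }

    void put(K key, V value) {
        cache.put(key, value);
        loader.store(key, value);                   // write through to the store
    }

    void remove(K key) {
        cache.remove(key);
        loader.remove(key);
    }

    boolean isCached(K key) { return cache.containsKey(key); }
}

class LoaderSketch {
    public static void main(String[] args) {
        InMemoryStoreLoader db = new InMemoryStoreLoader();
        db.rows.put("order-1", "legacy row");        // data that predates the cache

        LoaderBackedMap<String, String> map = new LoaderBackedMap<>(db);
        System.out.println(map.get("order-1"));      // loaded from the store on first access
        map.put("order-2", "new row");
        System.out.println(db.rows.get("order-2"));  // the write reached the store
    }
}
```

Because every write path goes through `LoaderBackedMap`, the application code cannot forget to update the store, which is the main advantage over synchronizing the two by hand.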

Why is storing our data in a database so important? Haven't we seen how much faster WebSphere eXtreme Scale is than an RDBMS? Shouldn't all of our data be stored in memory? An in-memory data grid is good for certain things. There are plenty of things that a traditional RDBMS is good at that any IMDG just doesn't support.

An obvious issue is that memory is significantly more expensive than hard drives. 8GB of server grade memory costs thousands of dollars. 8GB of server grade disk space costs pennies. Even though the disk is slower than memory, we can store a lot more data on it.

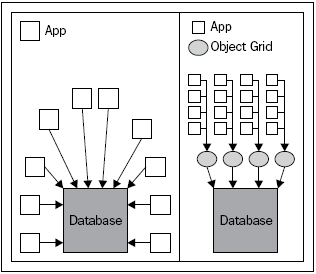

An IMDG shines where a sizeable portion of frequently-changing data can be cached so that all clients see the same data. The IMDG provides orders of magnitude better latency, read, and write speeds than any RDBMS. But we need to be aware that, for large data sets, an entire data set may not fit in a typical IMDG. If we focus on the frequently-changing data that must be available to all clients, then using the IMDG makes sense.

Imagine a deployment with 10 servers, each with 64GB of memory. Let's say that of the 64GB, we can use 50GB for ObjectGrid. For a 1TB data set, we can store 50% of it in cache. That's great! As the data set grows to 5TB, we can fit 10% in cache. That's not as good as 50%, but if it is the 10% of the data that is accessed most frequently, then we come out ahead. If that 10% of data has a lot of writes to it, then we come out ahead.
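The arithmetic in this example can be written out as a small calculation. This is a sketch that assumes 1TB = 1000GB, which is what the round percentages in the text imply. The class and method names are made up for the illustration.

```java
// Hypothetical helper for the capacity arithmetic in the text.
class CacheCoverage {
    // Fraction of the data set that fits in the grid, capped at 1.0.
    static double cachedFraction(int servers, double usableGbPerServer, double dataSetGb) {
        double gridCapacityGb = servers * usableGbPerServer;
        return Math.min(1.0, gridCapacityGb / dataSetGb);
    }

    public static void main(String[] args) {
        // 10 servers with 50GB usable each give 500GB of grid capacity.
        // Data set sizes assume 1TB = 1000GB.
        System.out.println("1TB data set: " + 100 * cachedFraction(10, 50, 1_000) + "% cached");
        System.out.println("5TB data set: " + 100 * cachedFraction(10, 50, 5_000) + "% cached");
    }
}
```

With 500GB of grid capacity, the 1TB data set is half cached and the 5TB data set is one tenth cached, matching the figures above.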

WebSphere eXtreme Scale gives us predictable, dynamic, and linear scalability. When our data set grows to 100TB, and the IMDG holds only 0.5% of the total data set, we can add more nodes to the IMDG and increase the total percentage of cacheable data (see below). It's important to note that this predictable scalability is immensely valuable. Predictable scalability makes capacity planning easier. It makes hardware procurement easier because you know what you need. Linear scalability provides a graceful way to grow a deployment as usage and data grow. You can rest easy knowing the limits of your application when it's using an IMDG. The IMDG also acts as a shock absorber in front of a database. We're going to explore some of the reasons why an IMDG makes a good shock absorber with the Loader functionality.
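If capacity really does grow linearly with the number of nodes, capacity planning reduces to a division. The sketch below assumes each server contributes the same usable memory (50GB, as in the earlier example) and that 1TB = 1000GB. The target is given in basis points (hundredths of a percent) so the calculation stays in integer arithmetic, and the names are hypothetical.

```java
// Hypothetical capacity-planning helper, assuming perfectly linear scaling.
class GridSizing {
    // Smallest number of servers whose combined usable memory covers the
    // requested share of the data set. 100 basis points = 1%.
    static long serversNeeded(long dataSetGb, long targetBasisPoints, long usableGbPerServer) {
        long numerator = dataSetGb * targetBasisPoints;   // required GB, scaled by 10,000
        long denominator = usableGbPerServer * 10_000L;   // GB per server, same scale
        return (numerator + denominator - 1) / denominator; // integer ceiling division
    }

    public static void main(String[] args) {
        long dataSetGb = 100_000; // 100TB, assuming 1TB = 1000GB
        System.out.println("0.5% cached: " + serversNeeded(dataSetGb, 50, 50) + " servers");
        System.out.println("5% cached:   " + serversNeeded(dataSetGb, 500, 50) + " servers");
    }
}
```

Under these assumptions, the 10-server deployment covers 0.5% of a 100TB data set, and covering ten times as much of it takes ten times as many servers. That proportionality is what makes procurement predictable.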

There are plenty of other situations, some that we have already covered, where an IMDG is the correct tool for the job. There are also plenty of situations where an IMDG just doesn't fit.

A traditional RDBMS has thousands of man-years of research, implementation tuning, and bug fixing already put into it. An RDBMS is well-understood and is easy to use in application development. There are standard APIs for interacting with one in almost any language.

In-memory data grids don't have the supporting tools built around them that RDBMSs have. We can't plug CrystalReports into an ObjectGrid instance to get daily reports out of the data in the grid. Querying the grid is useful when we run simple queries, but fails when we need to run the query over the entire data set, or run a complex query. The query engine in WebSphere eXtreme Scale is not as sophisticated as the query engine in an RDBMS. This also means the data we get from ad hoc queries is limited. Running ad hoc queries in the first place is more difficult. Even building an ad hoc query runner that interacts with an IMDG is of limited usefulness.

An RDBMS is a wonderful cross-platform data store. WebSphere eXtreme Scale is written in Java and only deals with Java objects. A simple way for an organization to share data between applications is in a plaintext database. We have standard APIs for database access in nearly every programming language. As long as we use the supported database driver and API, we will get the results we expect, including from ORM frameworks on other platforms like .NET and Rails. We could go on and on about why an RDBMS needs to be in place, but I think the point is clear. It's something we still need to make our software as useful as possible.
