Korean researchers have developed a GAN that can perform image-to-image translation in challenging cases. Instance-aware GAN, or InstaGAN as the authors call it, handles a variety of translation scenarios and shows better results than CycleGAN on specific problems. It can swap pants with skirts and giraffes with sheep. The system was designed by a student and an assistant professor from the Korea Advanced Institute of Science and Technology (KAIST) and an assistant professor from the Pohang University of Science and Technology (POSTECH). They published their results last week in a paper titled InstaGAN: Instance-Aware Image-to-Image Translation.

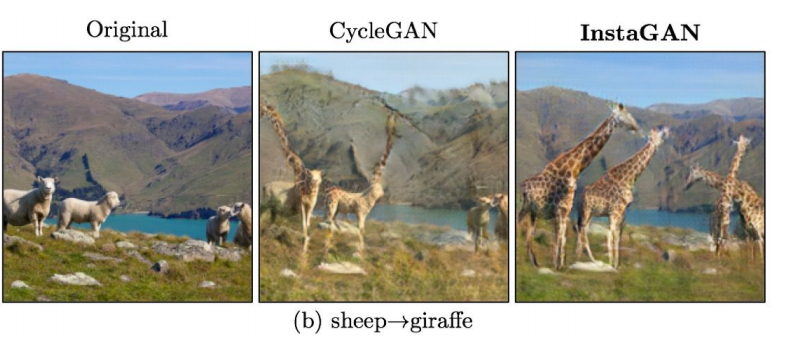

Systems that map images from one domain to another aren’t new, but the authors of the paper say they are the first to report image-to-image translation on multi-instance transfiguration tasks. Image-to-image translation methods that predate InstaGAN often failed in challenging cases such as multi-instance transfiguration, where significant changes are involved. A multi-instance transfiguration task involves multiple individual objects in an image, and the objective is to swap those objects without changing the background scene.

InstaGAN uses instance information, such as object segmentation masks, to improve on these challenging cases. The method shown in the paper translates both an image and its corresponding set of instance attributes. The authors introduce a context-preserving loss, which encourages the network to learn the identity function outside the target instances. A sequential mini-batch training technique handles multiple instances with limited GPU memory, and it also helps the network generalize better to images containing many instances.
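The two training ideas above can be illustrated with a toy sketch in Python. Everything here is an assumption for illustration, not the authors' code. Images and masks are flattened lists. The loss weights only pixels that are background in both the input mask and the translated mask, which is our reading of "outside the target instances", and averaging over those pixels is our own normalization choice. `toy_translate` is a hypothetical stand-in for the generator that simply inverts intensities inside each mask. The sequential loop feeds each step the image produced by the previous step together with the next subset of at most `batch_size` instance masks.

```python
from typing import Callable, List, Sequence, Tuple

# Assumed toy representation: an image is a flat list of pixel intensities and
# a mask is a flat list of 0/1 values of the same length (1 = instance pixel).
Image = List[float]
Mask = List[int]


def context_preserving_loss(x: Sequence[float], y_fake: Sequence[float],
                            mask_x: Sequence[int], mask_y: Sequence[int]) -> float:
    """Mean absolute difference between the input image and its translation,
    counted only where both the original and translated masks are background."""
    total, count = 0.0, 0
    for xi, yi, a, b in zip(x, y_fake, mask_x, mask_y):
        w = (1 - a) * (1 - b)  # 1 only on pixels outside every target instance
        total += w * abs(xi - yi)
        count += w
    return total / count if count else 0.0


def union_mask(masks: Sequence[Mask], size: int) -> Mask:
    """Pixel-wise OR of a set of instance masks (all zeros if the set is empty)."""
    return [max((m[i] for m in masks), default=0) for i in range(size)]


def sequential_translate(
    image: Image,
    masks: Sequence[Mask],
    translate: Callable[[Image, Sequence[Mask]], Tuple[Image, List[Mask]]],
    batch_size: int,
) -> Tuple[Image, List[Mask]]:
    """Translate instances a few at a time: each step receives the image from
    the previous step plus the next subset of at most batch_size masks."""
    translated: List[Mask] = []
    for start in range(0, len(masks), batch_size):
        subset = masks[start:start + batch_size]
        image, new_masks = translate(image, subset)
        translated.extend(new_masks)
    return image, translated


def toy_translate(image: Image, subset: Sequence[Mask]) -> Tuple[Image, List[Mask]]:
    """Hypothetical generator stand-in: invert intensities inside each mask
    and return copies of the masks unchanged."""
    out = list(image)
    for m in subset:
        out = [1.0 - v if bit else v for v, bit in zip(out, m)]
    return out, [list(m) for m in subset]


if __name__ == "__main__":
    image = [0.25, 0.5, 0.75, 1.0]
    masks = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]
    result, new_masks = sequential_translate(image, masks, toy_translate, batch_size=2)
    print(result)  # [0.75, 0.5, 0.25, 1.0]
    fg_in = union_mask(masks, len(image))
    fg_out = union_mask(new_masks, len(image))
    print(context_preserving_loss(image, result, fg_in, fg_out))  # 0.0
```

In this toy run the untouched background pixel is identical before and after translation, so the loss is zero. Processing instances in subsets keeps the per-step work bounded by `batch_size` rather than by the total number of objects, which is the memory motivation the article gives for the sequential scheme.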

The researchers compared InstaGAN with CycleGAN, doubling the number of CycleGAN’s parameters for a fair comparison, since InstaGAN uses two networks: one for images and one for masks. Where CycleGAN fails, the new method generates ‘reasonable shapes’. InstaGAN also preserves the background while changing the objects in an image, whereas CycleGAN is unable to maintain the original background.

Source: InstaGAN: Instance-Aware Image-to-Image Translation

The authors say that their idea of using set-structured side information has potential applications in other cross-domain generation tasks, such as neural machine translation or video generation.

For more details and examples of images translated by the model, check out the research paper.
