
Understanding the Oracle RDBMS

The term RDBMS, or Relational Database Management System, can be used in many ways and, more often than not, simply refers to a commercial database management product. However, the true meaning of the term refers to the way the product operates internally to store and retrieve data. There are many commercial and open source database management systems available today. While they all attempt to interface with the user in a manner that is generally compliant with international standards, they all operate differently internally. We will explore the characteristics that make the Oracle RDBMS unique and, in doing so, understand how it works.

Distinguishing between an instance and a database

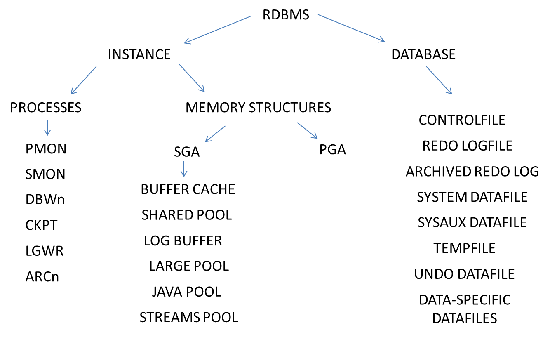

To define the Oracle database architecture, we must first define two terms and make a distinction between them. An instance is the set of background processes and memory structures that enable the Oracle kernel to operate. A database is the set of files that stores the data contained in the RDBMS. These two entities account for the three basic resources available to computers, namely the CPU, RAM, and disk. The instance represents the usage of the CPU and RAM, while the database represents the usage of the disk.

These definitions are architecturally oriented, and the distinction between the two isn't always fully recognized. In fact, the two terms are often used interchangeably. In most database configurations, it is reasonable to do so since, on a single server, the instance and database operate together. However, it's important to draw a distinction at this point, for three reasons. First, the terms themselves are used differently with other RDBMS products, such as Microsoft SQL Server. Second, when investigating the Oracle architecture, it is simpler to see the relationship between the various architectural components if we divide them accordingly. Lastly, although an instance and a database generally operate together on a single server, other database configurations are possible. In Oracle's Real Application Clusters, or RAC, the instance and database are both logically and physically separated. We will examine the subject of RAC later in the article.

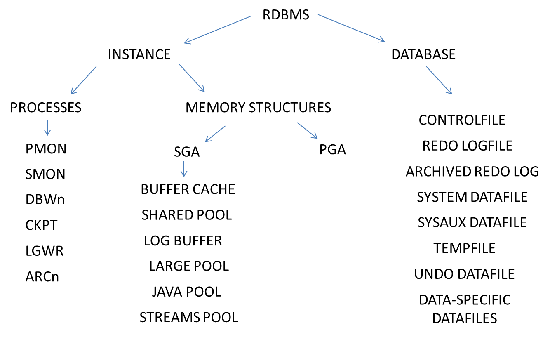

The following diagram gives us a broad look at the Oracle architecture as a whole. We will refer back to portions of it throughout the article:

Note that this is a logical diagram that groups similar functions together. We display this as a reverse tree diagram. The top of the tree is the RDBMS itself. From there, it branches into the instance and the database, the terms we have just defined. We will look at each branch of this diagram and examine the individual components, as well as how they operate and contribute to the inner workings of the Oracle RDBMS.

Examining the Oracle instance

As we mentioned before, the Oracle instance is composed of the necessary processes and memory structures required to operate the Oracle kernel. The instance is the first thing invoked when the database is started.

Recognizing the primary Oracle processes

The first components of the Oracle instance that we will examine are the Oracle background processes. These processes run in the background of the operating system and are not interacted with directly. Each process is highly specialized and has a specific function in the overall operation of the Oracle kernel. While these processes accomplish the same functions regardless of the host operating system, their implementation differs significantly from one operating system to another. On Unix-based systems, owing to Unix's multiprocess architecture, each Oracle process runs as a separate operating system process. Thus, we can actually see the processes themselves from within the operating system.

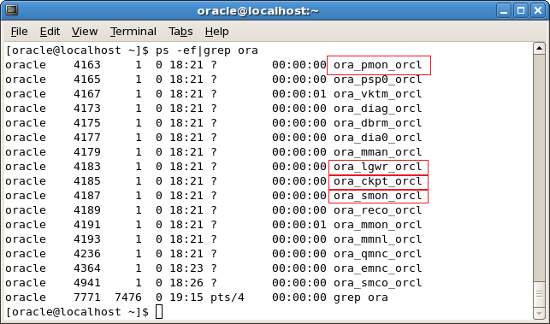

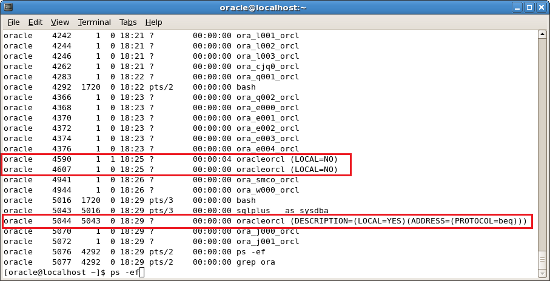

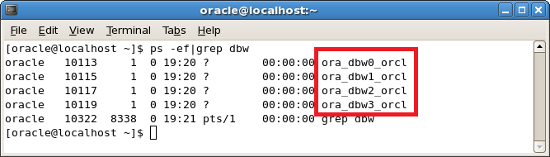

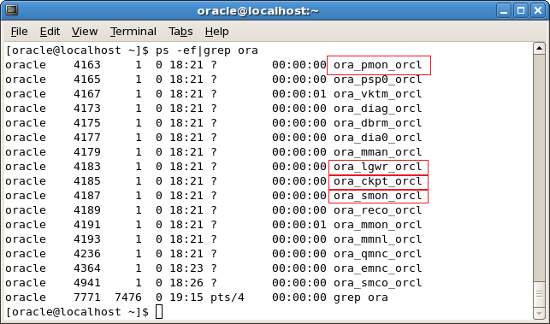

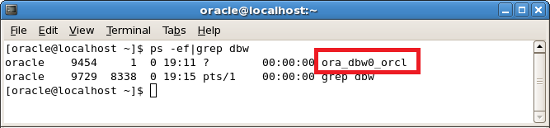

For instance, we can use the ps command on Linux to see these processes, as shown in the following screenshot. We've highlighted a few of them that we will examine in depth. Note that our background processes are named in the format ora_processtype_SID. Since the SID for our database is ORCL, that name forms a part of the full process name:

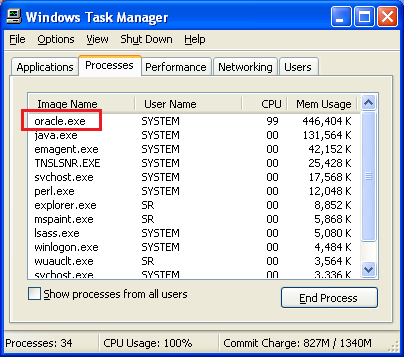

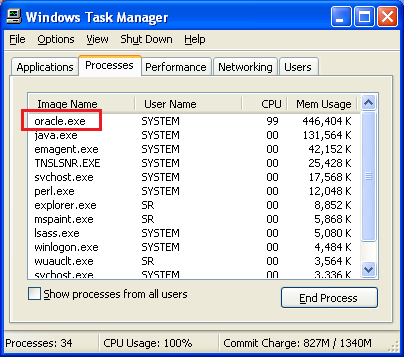
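To make the ora_processtype_SID naming format concrete, here is a minimal Python sketch that picks the process type and SID out of ps-style output. The sample lines, PIDs, and the function name `parse_background_processes` are invented for illustration; only the naming pattern comes from the text above.

```python
import re

# Sample lines in the style of `ps -ef` output; the PIDs and times are made up.
SAMPLE_PS_OUTPUT = """\
oracle    2301     1  0 09:12 ?        00:00:00 ora_pmon_orcl
oracle    2315     1  0 09:12 ?        00:00:00 ora_dbw0_orcl
oracle    2317     1  0 09:12 ?        00:00:00 ora_lgwr_orcl
oracle    2319     1  0 09:12 ?        00:00:00 ora_ckpt_orcl
oracle    2321     1  0 09:12 ?        00:00:00 ora_smon_orcl
root      2400     1  0 09:13 ?        00:00:00 /usr/sbin/sshd
"""

# Matches names of the form ora_<processtype>_<SID> at the end of a line.
BACKGROUND_PROCESS = re.compile(r"\bora_([a-z0-9]+)_(\w+)\s*$")


def parse_background_processes(ps_output):
    """Return (process_type, sid) pairs for each Oracle background process found."""
    found = []
    for line in ps_output.splitlines():
        match = BACKGROUND_PROCESS.search(line)
        if match:
            found.append((match.group(1), match.group(2)))
    return found
```

Calling `parse_background_processes(SAMPLE_PS_OUTPUT)` yields one pair per Oracle background process and skips unrelated lines such as the sshd entry.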

On Windows, rather than implementing each Oracle process as a separate OS process, the Oracle processes are implemented as threads since Windows is a multithreaded operating system. As a result, the Oracle kernel runs under a single executable called Oracle.exe. The background processes then run as threads under that single process. So, if we attempt to see the Oracle background processes using the Windows Task Manager, we only see the Oracle.exe executable, as shown in the next screenshot. The threads representing the Oracle processes are hidden from us, at least at the operating system level:

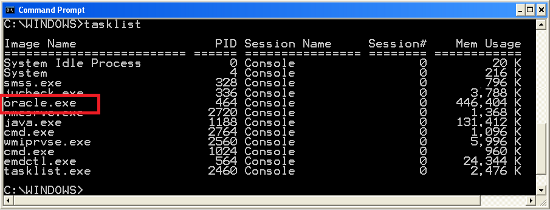

We can also display this information from the Windows command line by running the tasklist command:

Regardless of whether we can see each process directly from the operating system, once the instance starts, they are present. Each has a specific job and all run in concert to service the needs of the database.

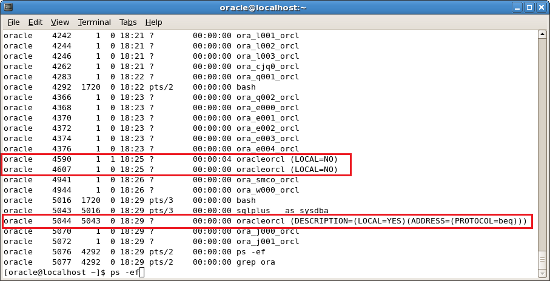

PMON – the Process Monitor

The core process of the Oracle architecture is PMON, the Process Monitor. PMON is tasked with monitoring and regulating all other Oracle-related processes. This includes not only background processes but server processes as well. Most databases run in dedicated server mode. In this mode, any user that connects to the database is granted a server process with which to do work. On Linux systems, this process can actually be viewed at the server level with the ps -ef command. When the user connects over the network, the process is labeled with LOCAL=NO in the process description. Privileged users such as database administrators can also make an internal connection to the database, provided that they log in from the server that hosts the database. When an internal connection is made, the process is labeled with LOCAL=YES. We see an example of each in the following screenshot of the ps -ef command on a Linux machine hosting Oracle:

Under ordinary circumstances, when a user properly disconnects a session from the database by exiting the tool used to connect to it, the server process given to that user terminates cleanly. However, what if, instead of disconnecting properly, the machine that the user was connecting from was rebooted? In situations like these, the server process on the database host is left running since it hasn't received the proper instructions to terminate. When this occurs, it is the job of PMON to monitor sessions and clean up orphaned processes. PMON normally "wakes up" every 3 seconds to check these processes and clean them up. In addition to this primary function, PMON is also responsible for registering databases with network listeners.

The real-world DBA

Since the instance cannot run unless PMON is running, DBAs sometimes check for it as a quick way of determining whether the instance is down. On Unix-based systems, we can see the processes at the server level using the command ps -ef | grep pmon. If no PMON process is returned, we know the instance is down.
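The same check can be scripted. The following is a minimal Python sketch, assuming a Unix-like host where `ps -ef` is available and the process is named ora_pmon_ followed by the SID, as described earlier. The function names are our own and are not part of any Oracle tooling.

```python
import subprocess


def pmon_is_running(ps_output, sid):
    """Return True if any line of ps output ends with ora_pmon_<sid>."""
    target = f"ora_pmon_{sid}"
    # Matching on the end of the line avoids counting a `grep pmon` process.
    return any(line.rstrip().endswith(target) for line in ps_output.splitlines())


def current_ps_output():
    """Capture `ps -ef` output from the local machine (Unix-like systems only)."""
    return subprocess.run(
        ["ps", "-ef"], capture_output=True, text=True, check=True
    ).stdout
```

On a database host, `pmon_is_running(current_ps_output(), "orcl")` would report whether the PMON process for an instance named orcl is present. Keeping the parsing separate from the call to ps makes the check easy to try against saved output.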

SMON – the System Monitor

The SMON, or System Monitor process, has several very important duties. Chiefly, SMON is responsible for instance recovery. Under normal circumstances, databases are shut down using the proper commands. When this occurs, all of the various components, mainly the datafiles, are properly recorded and synchronized so that the database is left in a consistent state. However, if the database crashes for some reason (the database's host machine loses power, for instance), this synchronization cannot occur. When the database is restarted, it will begin from an inconsistent state. Every time the instance is started, SMON checks for these marks of synchronization. If the database is in an inconsistent state, SMON performs instance recovery to resolve the inconsistencies. Once this is complete, the instance and database can open correctly. Unlike database recovery, where some data loss has occurred, instance recovery occurs without intervention from the DBA. It is an automatic process that is handled by SMON.

The SMON process is also responsible for various cleanup operations within the datafiles themselves. Tempfiles are the files that hold the temporary data that is written when an overflow from certain memory caches occurs. This temporary data is written in the form of temporary segments within the tempfile. When this data is no longer needed, SMON is tasked with removing these segments. The SMON process can also coalesce data within datafiles, removing gaps, which allows the data to be stored more efficiently.
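One way to picture coalescing is as merging neighboring stretches of free space into larger ones. The sketch below is a toy Python illustration of that idea only. Representing free space as (start_block, block_count) pairs is an assumption made for the example and is not a description of Oracle's internal structures.

```python
def coalesce_free_extents(extents):
    """Merge adjacent free extents, given as (start_block, block_count) pairs."""
    merged = []
    for start, count in sorted(extents):
        # If this extent begins exactly where the previous one ends, join them.
        if merged and merged[-1][0] + merged[-1][1] == start:
            prev_start, prev_count = merged[-1]
            merged[-1] = (prev_start, prev_count + count)
        else:
            merged.append((start, count))
    return merged
```

Given three touching extents and one separate extent, the function returns two entries: one large extent covering the touching ones, and the separate extent unchanged.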

DBWn – the Database Writer process

For all of the overhead duties of processes such as PMON and SMON, we can probably intuit that there must be a process that actually writes data to the datafiles. Until later versions, that process was named DBWR, the Database Writer process. The DBWR is responsible for writing the changed data that results from user operations, but it doesn't do it in the way that we might expect.

In Oracle, almost no operation is executed directly on the disk. The Oracle processing paradigm is to read data into memory, complete a given operation while the data is still in memory, and write it back to the disk. We will cover the reason for this in greater depth when we discuss memory caches, but for now let's simply say it is for performance reasons. Thus, a unit of data called a database block is read from the disk and placed into a specialized memory cache. (Strictly speaking, this read is performed by the server process working on behalf of the session rather than by DBWR itself.) If data is changed using an UPDATE statement, for instance, it is changed in memory. After some time, DBWR writes it back to the disk in its new state.
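The read, change in memory, and write back later cycle can be sketched with a toy model. This Python example is only an illustration of the paradigm. The class name `BufferCache`, the dictionary standing in for the datafiles, and the method names are all hypothetical.

```python
class BufferCache:
    """Toy model: blocks are copied into memory, changed there, and written back later."""

    def __init__(self, disk):
        self.disk = disk      # block id -> contents, standing in for the datafiles
        self.buffers = {}     # block id -> contents held in memory
        self.dirty = set()    # block ids changed in memory but not yet written

    def read(self, block_id):
        # Copy the block into memory on first access; later reads use the copy.
        if block_id not in self.buffers:
            self.buffers[block_id] = self.disk[block_id]
        return self.buffers[block_id]

    def update(self, block_id, contents):
        # The change is made to the in-memory copy only.
        self.read(block_id)
        self.buffers[block_id] = contents
        self.dirty.add(block_id)

    def write_back(self):
        # Some time later, changed buffers are written to disk in their new state.
        for block_id in sorted(self.dirty):
            self.disk[block_id] = self.buffers[block_id]
        written = len(self.dirty)
        self.dirty.clear()
        return written
```

After an `update`, the in-memory copy holds the new value while the dictionary playing the role of the disk still holds the old one. The two agree again only after `write_back` runs.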

If we think about it, it should be obvious that the amount of reading and writing in a database would constitute a great deal of work for one single process. It is certainly possible that a single DBWR process would become overloaded and begin to affect performance. That's why, in more recent versions of Oracle, we have the ability to instantiate multiple database writer processes. So we can refer to DBWR as DBWn, where "n" is a given instantiation of a database writer process. If our instance is configured to spawn three database writers, they would be dbw0, dbw1, and dbw2. The number of the DBWn processes that are spawned is governed by one of our initialization parameters, namely, db_writer_processes.

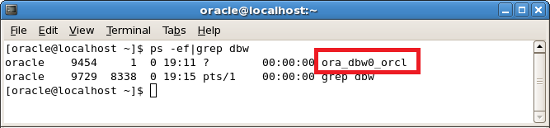

Let's take a closer look at how the value for db_writer_processes affects the database writer processes that we can see in the Linux operating system. We won't go into great depth with the commands that we'll be using at this point, but we can still see how the spawning of multiple DBWn processes works. We will become very familiar with commands such as these as we revisit them frequently throughout many of the examples in this book. First, let's examine the number of DBWn processes on our system using the ps command, with which we're familiar:


From the Linux command line, we use the ps -ef command along with the grep command that searches through the processes in the system with the string dbw in their names. This restricts our output to only those processes that contain dbw, which will be the database writer processes. As we can see in the preceding screenshot, there is only one database writer process named ora_dbw0_orcl.

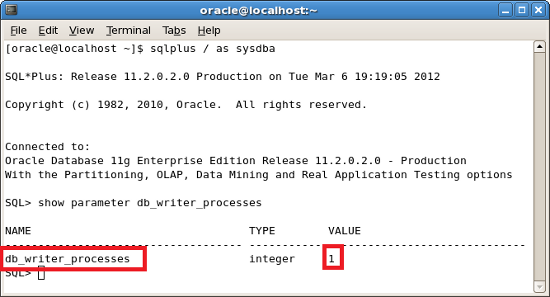

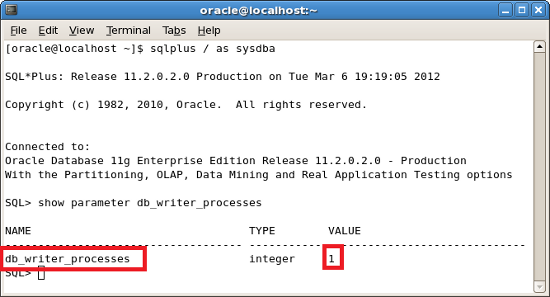

As mentioned, the number of the database writer processes is determined by an initialization parameter. The name of that parameter is db_writer_processes. We can determine the value of this parameter by logging into the database using SQL*Plus (the command sqlplus / as sysdba) and showing its value using the show parameter command, as in the following screenshot:

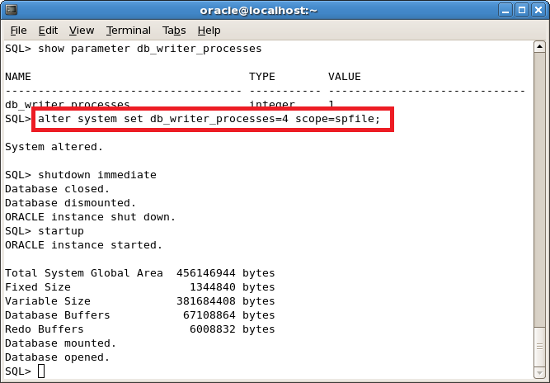

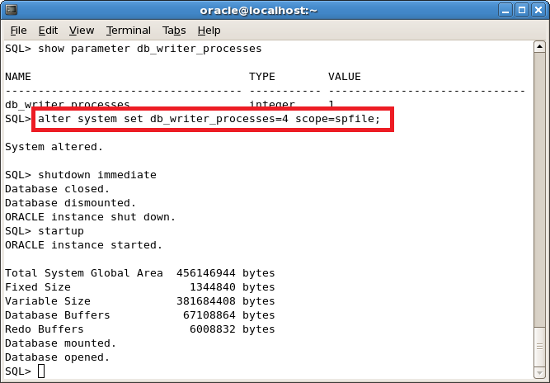

Since we've already determined that we only have a single dbw0 process, it should come as no surprise that the value for our parameter is 1. However, if we wish to add more database writers, it is simple to do so. From the SQL*Plus command line, we issue the following command, followed by the shutdown immediate and startup commands to shut down and start up the database:

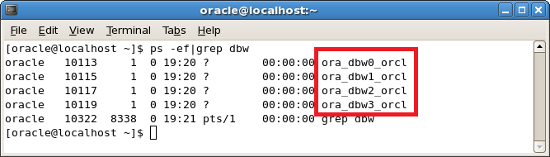

The alter system command instructs Oracle to set the db_writer_processes parameter to 4. The change is recognized when the database is restarted. From here, we type exit to leave SQL*Plus and return to the Linux command line. We then issue our ps command again and view the results:

As we can see in the preceding screenshot, there are four database writer processes, called ora_dbw0_orcl, ora_dbw1_orcl, ora_dbw2_orcl, and ora_dbw3_orcl, that align with our value for db_writer_processes. We now have four database writer processes with which to write data.

The real-world DBA

What's the optimal number of database writers? The answer is that, as with many aspects of database administration, it depends. The parameter has a maximum value of 20, so does that mean more is better? Not necessarily. The simplest answer is that the default value, either 1 or the integer value resulting from the number of CPUs divided by 8 (whichever is greater), will generally provide the best performance. Most opinions regarding best practices vary greatly and are usually based on the number of CPUs in the host box. Generally, the default value will serve you well unless your server is very large or heavy tuning is needed.
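The default described above is simple arithmetic, and the process names follow the dbw0, dbw1, dbw2 pattern seen earlier. Here is a minimal Python sketch of both. The function names are our own, and the name generator deliberately stops at ten writers because the text only shows single-digit suffixes.

```python
def default_db_writer_processes(cpu_count):
    """Default as described: 1 or the CPU count divided by 8, whichever is greater."""
    if cpu_count < 1:
        raise ValueError("cpu_count must be at least 1")
    return max(1, cpu_count // 8)


def writer_process_names(count, sid):
    """Names in the ora_dbwN_sid format for a given number of writers."""
    if not 1 <= count <= 10:
        # Assumption of this sketch: only the dbw0 to dbw9 suffixes are modeled.
        raise ValueError("this sketch only names dbw0 through dbw9")
    return [f"ora_dbw{n}_{sid}" for n in range(count)]
```

For a 4-CPU host the default works out to a single writer, while a 16-CPU host would get two. `writer_process_names(4, "orcl")` reproduces the four process names from the example.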

CKPT – the Checkpoint process

We mentioned in the preceding section that the purpose of the DBWn process is to write changed data from memory back to disk. Once a block of data is moved into memory, it is referred to as a buffer. When a buffer in memory is changed using an UPDATE statement, for instance, it is called a dirty buffer. Dirty buffers can remain in memory for a time and are not automatically flushed to disk. The event that signals the writing of dirty buffers to disk is known as a checkpoint. The checkpoint ensures that memory is kept available for other new buffers and establishes a point for recovery. In earlier versions of Oracle, the type of checkpoint that occurred was known as a full checkpoint. This checkpoint flushes all dirty buffers back to the datafiles on the disk. While full checkpoints represent a complete flush of the dirty buffers, they are expensive in terms of performance. Since Version 8i, the Oracle kernel has made use of an incremental checkpoint that intelligently flushes only part of the available dirty buffers when needed. Full checkpoints now occur only during a shutdown of the database or on demand, using a command.
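The difference between the two kinds of checkpoint can be illustrated with a toy model. In this Python sketch, dirty buffers are kept in the order they were changed, and the incremental variant writes the oldest ones first up to a fixed limit. Both of those choices are assumptions made for the example. The text does not describe how Oracle selects which buffers to flush.

```python
from collections import OrderedDict


def full_checkpoint(dirty_buffers, datafile):
    """Write every dirty buffer to the datafile and leave none behind."""
    written = list(dirty_buffers)
    datafile.update(dirty_buffers)
    dirty_buffers.clear()
    return written


def incremental_checkpoint(dirty_buffers, datafile, max_writes):
    """Write only the oldest dirty buffers, up to max_writes of them."""
    written = []
    while dirty_buffers and len(written) < max_writes:
        block_id, contents = dirty_buffers.popitem(last=False)  # oldest first
        datafile[block_id] = contents
        written.append(block_id)
    return written
```

Here `dirty_buffers` is an `OrderedDict` mapping block ids to contents and `datafile` is a plain dictionary. An incremental call leaves the remaining dirty buffers in memory, whereas a full call empties the set in one pass, which is why it costs more.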

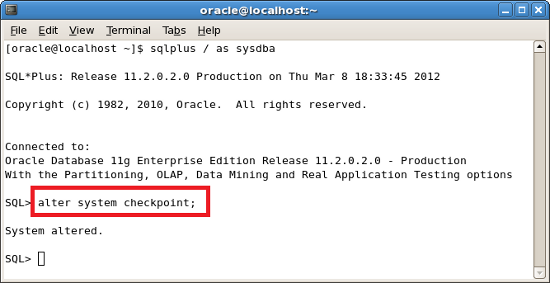

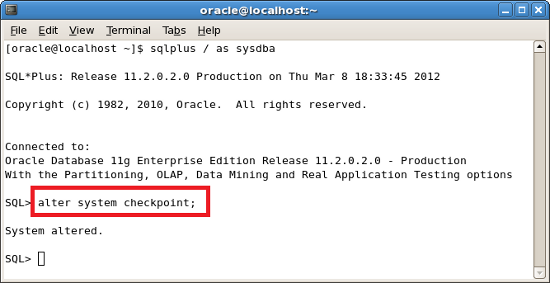

The process in the instance that orchestrates checkpointing is the CKPT process. The CKPT process uses incremental checkpoints at regular intervals to ensure that dirty buffers are written out and any changes recorded in the redo logs are kept consistent for recovery purposes. Unlike the DBWn process, there is only one CKPT process. Although the incremental checkpoint method is used by CKPT, we can also force a full checkpoint using the command shown in the following screenshot:

LGWR – the Log Writer process

Redo logs are files that serially store the changes that occur in the database. These changes can be anything from INSERT, DELETE, or UPDATE statements executed against the database to the creation of new tables. Note, however, that queries against the database using SELECT statements do not constitute changes and are not recorded in the redo logs. The primary function of redo logs is to act during database recovery, where database changes can be "rolled forward" from a backup.

When a change occurs in the database, that change is first written into a memory buffer called the log buffer that is specifically tasked with handling database changes. It is then written into the redo logs. The process that writes changes from memory to disk is the LGWR process. There is only one LGWR process, so it is important that it moves data as efficiently as possible. There are three conditions that can occur to cause LGWR to move changes from memory into the redo logfiles:

- When a transaction is committed
- When the log buffer becomes one-third full
- Every three seconds

The last of these conditions, "every three seconds," is actually caused by the DBWn process. The database writer has a three-second timeout before it must write a limited number of dirty buffers to disk. When this occurs, the LGWR process also flushes its changes just before DBWn does its work. This ensures that uncommitted transactions can always be rolled back.
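The flush triggers can be written down as a small decision function. This Python sketch assumes the three commonly documented conditions: a commit, a log buffer that is one-third full, and the three-second timeout mentioned above. The function name and its parameters are hypothetical and exist only for illustration.

```python
def lgwr_should_flush(commit_issued, buffer_used, buffer_size, seconds_since_flush):
    """Return the reason LGWR would flush the log buffer, or None if no trigger applies."""
    if commit_issued:
        return "commit"
    # Integer comparison avoids rounding issues when testing for one-third full.
    if buffer_used * 3 >= buffer_size:
        return "one-third full"
    if seconds_since_flush >= 3:
        return "three-second timeout"
    return None
```

The order of the checks only decides which reason is reported when several conditions hold at once. Any one of them is enough to cause a flush.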

ARCn – the Archiver process

The ARCH, or the Archiver process, is an optional but very important process. Let's continue describing the life cycle of a change in Oracle. To review, as sessions change data, those changes are written into the log buffer. At periodic intervals, those changes are written out serially to the redo logs by the LGWR process. The number and size of the redo logs can vary, but there is always a limited number of them. When one redo log becomes full, LGWR switches to the next one. Since there is a finite number of redo logs, LGWR eventually fills them all. When that happens, LGWR switches back to the original redo log and overwrites the changes that exist in the log. This effectively destroys those changes and defeats the purpose of storing them at all. When the database operates in this manner, overwriting changes that were stored in the redo logs, we say that it is operating in NOARCHIVELOG mode.

In order to prevent changes from being lost, we must operate in a different mode: ARCHIVELOG mode. When the database is in ARCHIVELOG mode, the contents of the redo logs are written out to a different type of file called archive logs. Archive logs simply contain the data that was in the redo log and serve as its static copy. However, archive logs never overwrite each other. Thus, the history of changes stored in the archive logs and redo logs constitutes all the changes that have occurred from a given point in time.

The process that enables ARCHIVELOG mode is the Archiver process, or ARCH. The ARCH copies data from the redo logs to the archive logs and does so before the data in the redo logs can be overwritten.
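The effect of the two modes on the history of changes can be seen in a toy simulation. This Python sketch models a fixed set of redo logs that are filled in a cycle. The class name `RedoLogs`, the use of integers as stand-ins for changes, and the tiny log sizes are all assumptions made for the example.

```python
class RedoLogs:
    """Toy model of a fixed set of redo logs that are filled in a cycle."""

    def __init__(self, log_count, log_capacity, archivelog_mode):
        self.logs = [[] for _ in range(log_count)]
        self.log_capacity = log_capacity
        self.archivelog_mode = archivelog_mode
        self.current = 0
        self.archive_logs = []  # static copies that are never overwritten

    def write(self, change):
        if len(self.logs[self.current]) == self.log_capacity:
            self._switch()
        self.logs[self.current].append(change)

    def _switch(self):
        # In ARCHIVELOG mode the full log is copied before it can be reused.
        if self.archivelog_mode:
            self.archive_logs.append(list(self.logs[self.current]))
        self.current = (self.current + 1) % len(self.logs)
        self.logs[self.current] = []  # reusing a log discards what it held

    def recoverable_changes(self):
        """All changes still readable from archive logs or online redo logs."""
        changes = set()
        for log in self.archive_logs + self.logs:
            changes.update(log)
        return sorted(changes)
```

Writing ten changes into two logs that hold three changes each leaves only the most recent changes available in NOARCHIVELOG mode, because the earlier ones were overwritten when the logs were reused. With `archivelog_mode=True`, the same sequence of writes leaves all ten changes recoverable.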

Like DBWn, the Archiver process can run as multiple processes, albeit with a different purpose. In previous versions of Oracle, the Archiver process ran as a single process, ARCH. In recent versions, it is more accurate to call the Archiver process by its proper name, ARCn, where "n" is the number for one of multiple Archiver processes. When ARCn runs as multiple processes, it does so in order to write to multiple locations, rather than using multiple processes to write to a single location.

Since, along with the redo logs, the archive logs contain all of the changes that have occurred in the database, they are critical to database recovery. As such, we can configure our database to write out archive log copies to more than one location. For instance, we may want to configure the Archiver process to write archive logs to two different locations on disk or write a copy of them out to a tape drive to mitigate the risk of data loss.
